Kinect 4 Windows v2 – Custom Gestures in Unity

I have received a few questions recently about detecting Kinect custom gestures in Unity 3D, and it seems there are a few issues with getting this up and running. I have previously posted about Kinect custom gestures and about using the ‘built-in’ gestures from the Kinect 4 Windows SDK inside Unity, so it makes sense to complete the loop here. I’ll run through my experience. A few things to set up first:

I created a new Unity project and chose to install the Visual Studio 2013 Tools Unity Package (not required, but I like to debug using Visual Studio).

I imported the Kinect Unity custom packages via Assets > Import Package > Custom Package, navigating to the Kinect Unity package location on my local disk (the package can be downloaded from http://go.microsoft.com/fwlink/?LinkID=513177). Add both the Kinect and Kinect.VisualGestureBuilder packages.

Creating the Scene

As in my previous post, I created an empty game object and a new C# script, and added the following code. It detects the Kinect sensor, pulls the body data and exposes it to other scripts, and retrieves the body tracking ID, which is required to identify the body on which we are detecting gestures. To keep things simple I assumed only one tracked body. As always, remember this is intended as a sample, so I may not be as careful as I should be with cleaning up event handlers, etc.

    using UnityEngine;
    using Windows.Kinect;

    public class BodySourceManager : MonoBehaviour
    {
        private KinectSensor _Sensor;
        private BodyFrameReader _Reader;
        private Body[] _Data = null;
        private ulong _trackingId = 0;

        // Assigned in the Unity inspector; receives the tracking ID of the tracked body.
        public CustomGestureManager GestureManager;

        // Exposes the latest body data to other scripts.
        public Body[] GetData()
        {
            return _Data;
        }

        void Start()
        {
            _Sensor = KinectSensor.GetDefault();
            if (_Sensor != null)
            {
                _Reader = _Sensor.BodyFrameSource.OpenReader();
                if (!_Sensor.IsOpen)
                {
                    _Sensor.Open();
                }
            }
        }

        void Update()
        {
            if (_Reader != null)
            {
                var frame = _Reader.AcquireLatestFrame();
                if (frame != null)
                {
                    if (_Data == null)
                    {
                        _Data = new Body[_Sensor.BodyFrameSource.BodyCount];
                    }

                    _trackingId = 0;
                    frame.GetAndRefreshBodyData(_Data);

                    // Release the frame promptly so the sensor can deliver the next one.
                    frame.Dispose();
                    frame = null;

                    // Only one body is assumed: use the first tracked body found.
                    foreach (var body in _Data)
                    {
                        if (body != null && body.IsTracked)
                        {
                            _trackingId = body.TrackingId;
                            if (GestureManager != null)
                            {
                                GestureManager.SetTrackingId(body.TrackingId);
                            }
                            break;
                        }
                    }
                }
            }
        }

        void OnApplicationQuit()
        {
            if (_Reader != null)
            {
                _Reader.Dispose();
                _Reader = null;
            }

            if (_Sensor != null)
            {
                if (_Sensor.IsOpen)
                {
                    _Sensor.Close();
                }
                _Sensor = null;
            }
        }
    }

The only difference from the previous post here is the call to set the tracking ID. Next, I added another script to load the custom gesture database and detect the gestures:

    using UnityEngine;
    using Windows.Kinect;
    using Microsoft.Kinect.VisualGestureBuilder;

    public class CustomGestureManager : MonoBehaviour
    {
        VisualGestureBuilderDatabase _gestureDatabase;
        VisualGestureBuilderFrameSource _gestureFrameSource;
        VisualGestureBuilderFrameReader _gestureFrameReader;
        KinectSensor _kinect;
        Gesture _salute;
        Gesture _saluteProgress;
        ParticleSystem _ps;

        public GameObject AttachedObject;

        // Called by BodySourceManager on every frame in which a body is tracked.
        public void SetTrackingId(ulong id)
        {
            // Start() may not have run yet, so the reader can still be null.
            if (_gestureFrameReader == null)
                return;

            _gestureFrameReader.IsPaused = false;
            _gestureFrameSource.TrackingId = id;
        }

        // Use this for initialization
        void Start()
        {
            if (AttachedObject != null)
            {
                _ps = AttachedObject.particleSystem;
                if (_ps != null)
                {
                    _ps.emissionRate = 4;
                    _ps.startColor = Color.blue;
                }
            }

            _kinect = KinectSensor.GetDefault();
            _gestureDatabase = VisualGestureBuilderDatabase.Create(Application.streamingAssetsPath + "/salute.gbd");
            _gestureFrameSource = VisualGestureBuilderFrameSource.Create(_kinect, 0);

            // Register every gesture in the database and keep references to the two we use.
            foreach (var gesture in _gestureDatabase.AvailableGestures)
            {
                _gestureFrameSource.AddGesture(gesture);
                if (gesture.Name == "salute")
                {
                    _salute = gesture;
                }
                if (gesture.Name == "saluteProgress")
                {
                    _saluteProgress = gesture;
                }
            }

            _gestureFrameReader = _gestureFrameSource.OpenReader();
            _gestureFrameReader.IsPaused = true;

            // Subscribe once here rather than in SetTrackingId, which runs every
            // frame and would otherwise add a duplicate handler on each call.
            _gestureFrameReader.FrameArrived += _gestureFrameReader_FrameArrived;
        }

        void _gestureFrameReader_FrameArrived(object sender, VisualGestureBuilderFrameArrivedEventArgs e)
        {
            if (AttachedObject == null || _salute == null)
                return;

            VisualGestureBuilderFrameReference frameReference = e.FrameReference;
            using (VisualGestureBuilderFrame frame = frameReference.AcquireFrame())
            {
                if (frame == null || frame.DiscreteGestureResults == null)
                    return;

                if (frame.DiscreteGestureResults.Count == 0)
                    return;

                DiscreteGestureResult result = frame.DiscreteGestureResults[_salute];
                if (result == null)
                    return;

                if (result.Detected)
                {
                    // The continuous gesture is optional; without it there is nothing to scale by.
                    if (_saluteProgress == null || frame.ContinuousGestureResults == null)
                        return;

                    var progressResult = frame.ContinuousGestureResults[_saluteProgress];
                    var prog = progressResult.Progress;

                    // Grow the object and intensify the particles as the salute progresses.
                    float scale = 0.5f + prog * 3.0f;
                    AttachedObject.transform.localScale = new Vector3(scale, scale, scale);
                    if (_ps != null)
                    {
                        _ps.emissionRate = 100 * prog;
                        _ps.startColor = Color.red;
                    }
                }
                else
                {
                    // No salute detected: return the particle system to its idle state.
                    if (_ps != null)
                    {
                        _ps.emissionRate = 4;
                        _ps.startColor = Color.blue;
                    }
                }
            }
        }
    }

This code is more or less the same as the code here /kinect/unity/winrt/2014/11/21/kinect-4-windows-v2-custom-gestures-in-unity.html.
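One thing worth keeping in mind with this arrangement: SetTrackingId is called from Update on every frame in which a body is tracked, and C# events do not de-duplicate handlers. Anything that subscribes to FrameArrived therefore needs to do so only once. The following is a minimal, Kinect-free sketch of the effect; `FakeReader` and `Subscriber` are hypothetical stand-ins I made up for illustration, not types from the Kinect SDK.

```csharp
using System;

// Hypothetical stand-in for a frame reader; only the event matters here.
public class FakeReader
{
    public event EventHandler FrameArrived;

    public void Raise()
    {
        var handler = FrameArrived;
        if (handler != null)
        {
            handler(this, EventArgs.Empty);
        }
    }
}

public class Subscriber
{
    public int Calls;

    private readonly FakeReader _reader;
    private bool _subscribed;

    public Subscriber(FakeReader reader)
    {
        _reader = reader;
    }

    // Naive version: every call adds another copy of the handler,
    // so one raised event runs OnFrame once per earlier call.
    public void SubscribeNaive()
    {
        _reader.FrameArrived += OnFrame;
    }

    // Guarded version: safe to call once per frame.
    public void SubscribeOnce()
    {
        if (_subscribed)
        {
            return;
        }
        _reader.FrameArrived += OnFrame;
        _subscribed = true;
    }

    private void OnFrame(object sender, EventArgs e)
    {
        Calls++;
    }
}
```

Calling `SubscribeNaive` three times and raising the event once runs the handler three times, whereas `SubscribeOnce` keeps it at one. In a Unity script the simplest equivalent is usually to subscribe a single time in Start.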

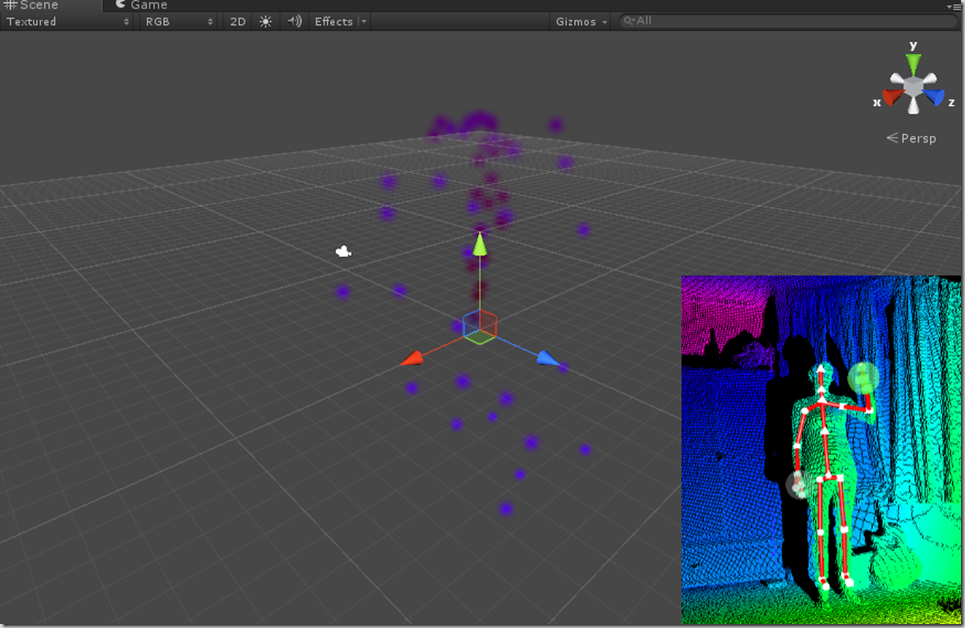

Attaching a Game Object

Once this script is in place you can set the ‘AttachedObject’ variable via the Unity3D UI by dragging a game object onto the field on the property editor. I created a particle system for the sample and dragged it on after changing some of the properties. When a gesture is detected I use the progress value to alter some properties on the particle system; changing colours and emission rate.
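To make the mapping from progress to visuals easier to see (and to tweak), here is a small sketch that pulls the arithmetic out of the event handler. The constants come from the script above; the `SaluteFeedback` class and the clamping to the 0..1 range are my own additions for illustration, on the assumption that progress is meant to stay within that range.

```csharp
using System;

// Hypothetical helper: the same arithmetic as the handler, without Unity types.
public static class SaluteFeedback
{
    // Emission rate used while no salute is detected (value from the script).
    public const float IdleEmissionRate = 4f;

    // Assumption: progress is intended to lie in 0..1, so clamp defensively.
    private static float Clamp01(float value)
    {
        return Math.Max(0f, Math.Min(1f, value));
    }

    // Uniform scale for the attached object: 0.5 at rest, 3.5 at full progress.
    public static float ScaleFor(float progress)
    {
        return 0.5f + Clamp01(progress) * 3.0f;
    }

    // Particle emission rate: 0 at no progress, 100 at full progress.
    public static float EmissionRateFor(float progress)
    {
        return 100f * Clamp01(progress);
    }
}
```

With this in place the handler would set `localScale` from `SaluteFeedback.ScaleFor(prog)` and the emission rate from `SaluteFeedback.EmissionRateFor(prog)`, which keeps the tuning values in one spot.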

Accessing the Gesture Database

Notice that the code above loads the .gbd file from my previous post, which contains a discrete gesture, ‘salute’, and a continuous gesture, ‘saluteProgress’. To access the database you can use the streamingAssetsPath provided by Unity, which gives access to local resources; more details here.
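The script builds the database path by string concatenation. As a hedged alternative, the sketch below uses `Path.Combine` and rejects anything that is not a .gbd file before it reaches the gesture builder. `GestureDatabasePath` is a hypothetical helper name, and I have not verified how the gesture database loader treats the platform's path separators, so treat this as a starting point rather than a drop-in replacement.

```csharp
using System;
using System.IO;

// Hypothetical helper for locating a gesture database under StreamingAssets.
public static class GestureDatabasePath
{
    // In Unity, pass Application.streamingAssetsPath as the first argument.
    public static string For(string streamingAssetsPath, string fileName)
    {
        if (string.IsNullOrEmpty(streamingAssetsPath))
        {
            throw new ArgumentException("streamingAssetsPath must not be empty", "streamingAssetsPath");
        }
        if (string.IsNullOrEmpty(fileName) ||
            !fileName.EndsWith(".gbd", StringComparison.OrdinalIgnoreCase))
        {
            throw new ArgumentException("expected a .gbd gesture database file", "fileName");
        }

        // Path.Combine inserts the platform's directory separator where needed.
        return Path.Combine(streamingAssetsPath, fileName);
    }
}
```

In the script this would read `GestureDatabasePath.For(Application.streamingAssetsPath, "salute.gbd")`. Remember that the .gbd file has to sit in the project's Assets/StreamingAssets folder for Unity to copy it into the build.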

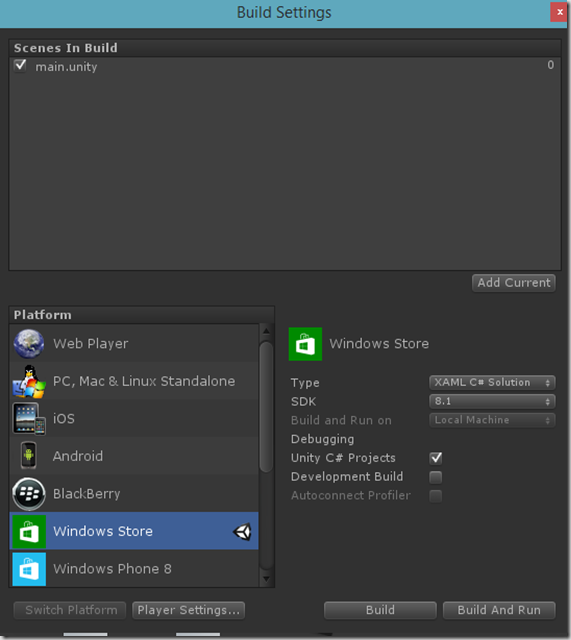

Build settings

You can use the Unity File > Build Settings… dialog to configure the output project (in this case Windows Store output).

Building this will generate the Windows Store app project, which you can load into Visual Studio and run or debug as required. At this stage it is important to remember to do the following:

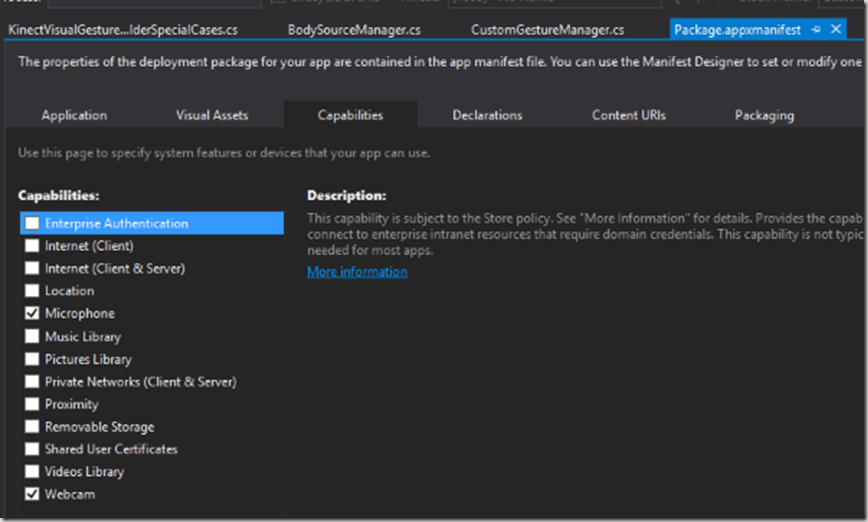

Add Webcam and Microphone capabilities to your app by editing the Windows Store app package manifest

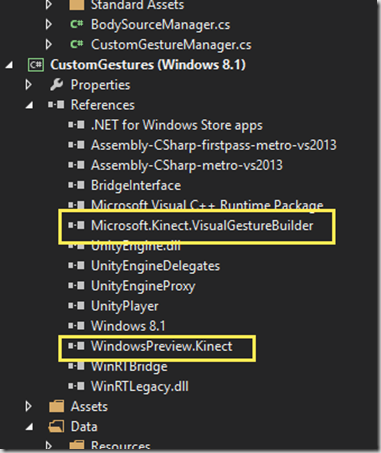

Add references in the app project to WindowsPreview.Kinect and Microsoft.Kinect.VisualGestureBuilder

These last two have caught me out on a number of occasions and it’s not always obvious when this is the cause of problems.

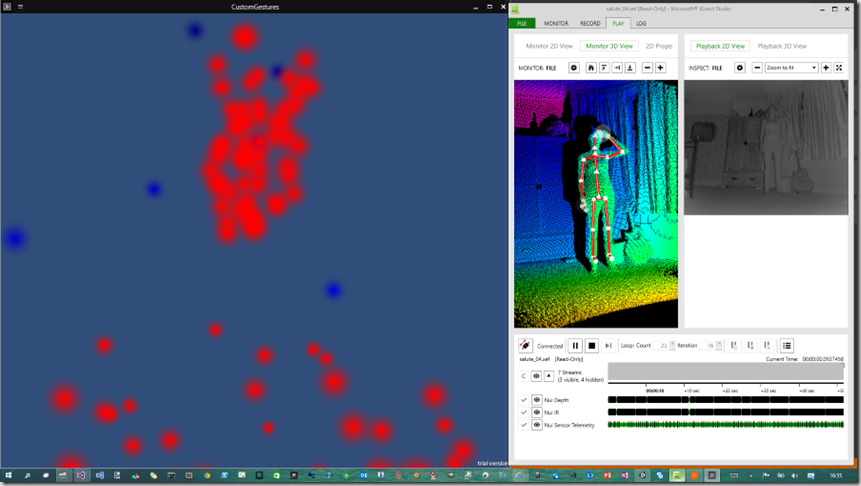

This shows the final result captured mid-salute.

Find the sample project here.

Other useful resources:

Another working sample - https://github.com/carmines/workshop/blob/dev/Unity/VGBSample.unitypackage
