Visual Gesture Builder – Kinect 4 Windows v2

When I created a multi-user drum kit for a previous project, I used a heuristic detector to code the gesture of a user hitting a drum, as it was intended as a simple demo and a heuristic was the only quick option. By ‘heuristic detector’ I simply mean that, as I tracked the position of each hand of a tracked skeleton, I wrote conditional code to detect whether the hand passed through the virtual drum: effectively, collision detection code in 3D space. It worked okay for my scenario but suffers from some issues:

- The height of the hit point was fixed in 3D space

- Everyone hits the drums differently
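To make the heuristic approach concrete, here is a minimal Python sketch of the kind of collision test described above (not the code from my drum kit project). It assumes camera-space hand positions in metres with y pointing up, models the drum as a flat disc at a fixed height (which is exactly the first issue listed), and reports a hit when the hand crosses the drum's top surface moving downwards within its radius. `Vec3`, `Drum` and `hand_hit_drum` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float  # assumed to point up
    z: float


@dataclass
class Drum:
    centre: Vec3   # fixed position of the drum's top surface (metres)
    radius: float  # radius of the drum head (metres)


def hand_hit_drum(drum: Drum, prev: Vec3, curr: Vec3) -> bool:
    """True if the hand moved down through the drum's top plane inside its radius."""
    # The hand must be above the plane in the previous frame and at/below it now
    if not (prev.y > drum.centre.y >= curr.y):
        return False
    # Interpolate where between the two frames the hand crossed the plane
    t = (prev.y - drum.centre.y) / (prev.y - curr.y)
    x = prev.x + t * (curr.x - prev.x)
    z = prev.z + t * (curr.z - prev.z)
    # Check the crossing point lies within the drum head
    dx, dz = x - drum.centre.x, z - drum.centre.z
    return dx * dx + dz * dz <= drum.radius ** 2
```

Every number in `Drum` is hand-tuned, which is why this approach struggles once users of different heights and playing styles step up to the kit.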

These issues could be addressed by extending the heuristic to adapt to the user’s skeleton height, arm reach, etc., but as more and more details are considered it is not hard to imagine the tests becoming complicated and generating many lines of code. Imagine, for example, that you wanted to detect a military-style salute in your game or app: which criteria would you watch for in your heuristic? The angle between wrist and shoulder? The proximity of the hand to the head? Working out which criteria would give the best results is quite a thought exercise, and this is a fairly simple gesture.
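As an illustration of how quickly the criteria pile up, here is a hypothetical salute heuristic in Python. It borrows joint names from the Kinect `JointType` naming purely as dictionary keys, assumes camera-space positions in metres with y pointing up, only checks the right hand, and scales its thresholds by shoulder width so it adapts somewhat to the user's size. The ratios `near_head_ratio` and `elbow_drop_ratio` are made-up values that would need tuning.

```python
import math

Joint = tuple[float, float, float]  # (x, y, z) in metres, y pointing up


def looks_like_salute(joints: dict[str, Joint],
                      near_head_ratio: float = 0.6,
                      elbow_drop_ratio: float = 0.3) -> bool:
    """Hypothetical right-handed salute heuristic; the ratios are untuned guesses."""
    # Use shoulder width as a per-user scale so thresholds adapt to body size
    scale = math.dist(joints["ShoulderLeft"], joints["ShoulderRight"])
    hand, head = joints["HandRight"], joints["Head"]
    elbow, shoulder = joints["ElbowRight"], joints["ShoulderRight"]

    hand_near_head = math.dist(hand, head) < near_head_ratio * scale
    hand_above_shoulder = hand[1] > shoulder[1]
    elbow_raised = elbow[1] > shoulder[1] - elbow_drop_ratio * scale
    return hand_near_head and hand_above_shoulder and elbow_raised
```

Even this toy version has three separate tests and two magic numbers, and it still ignores the left hand, head orientation and timing.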

Kinect skeletal tracking is powered by machine learning techniques, as outlined in the paper Real-Time Human Pose Recognition in Parts from a Single Depth Image and also explained in this video: https://www.youtube.com/watch?v=zFGPjRPwyFw. If you are completely new to machine learning, there are full introductory courses available online at https://www.coursera.org/course/ml and https://www.coursera.org/course/machlearning. These techniques enable the Kinect system to identify parts of the human body, and subsequently joint positions, from the Kinect depth data in real time.

Machine learning techniques can also be used to detect gestures. The Kinect for Windows team have exposed these techniques to allow you to create your own gesture detection.

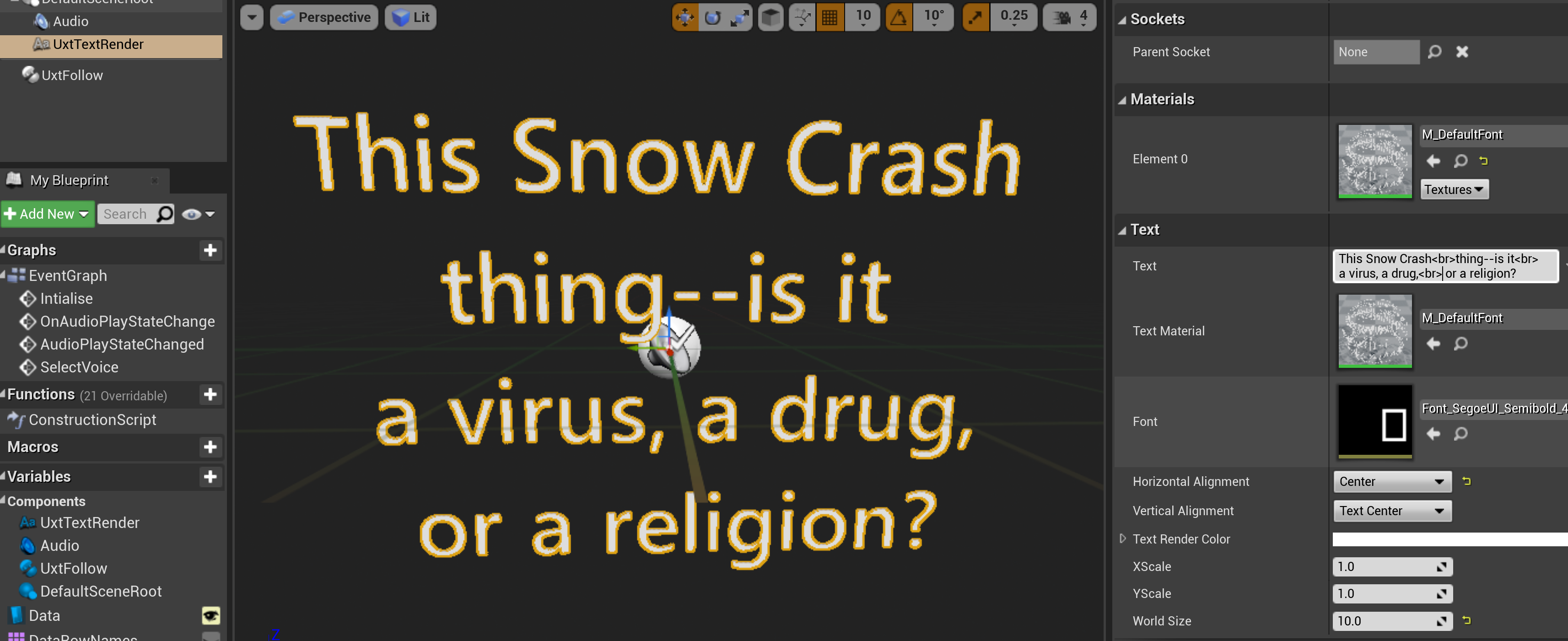

Visual Gesture Builder

Enter the Visual Gesture Builder (VGB), which lets you apply machine learning techniques to your own gestures. These techniques use recorded and tagged data: the more data showing positive and negative behaviours in relation to your required gesture, the better. One clip of recorded data can be enough to see a result, but it won’t work in real-world scenarios. In addition, it is common practice to split the data into a training set and a separate test set, which can be used to verify that the trained system is working as expected. With those things in mind, let’s take a look at how to record and tag data for use in VGB.
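A minimal sketch of such a split, assuming each recorded clip is identified by a file name (the `.xef` names in the example are invented). It splits whole clips rather than individual frames, since frames from the same clip are highly correlated and would otherwise leak between the training and test sets. `split_clips` is a hypothetical helper, not part of VGB, which keeps training and test clips in its own project structure.

```python
import random


def split_clips(clips: list[str], test_fraction: float = 0.25,
                seed: int = 0) -> tuple[list[str], list[str]]:
    """Split whole clips (not individual frames) into (training, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = sorted(clips)               # deterministic starting order
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]
```

For example, `split_clips([f"salute_{i:02d}.xef" for i in range(8)])` puts six clips in the training set and two in the test set, and returns the same split every time for the same seed.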

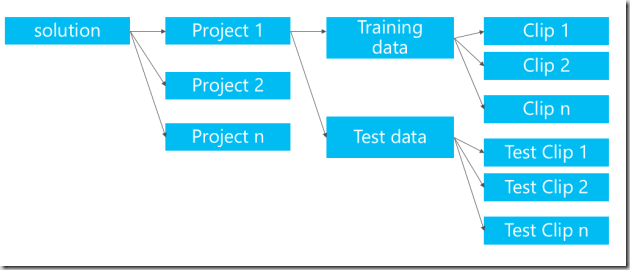

If you haven’t played with Kinect Studio, please refer to Kinect for Windows V2 SDK: Kinect Studio–the “Swiss Army Knife” over at Mike Taulty’s blog, as this tool enables you to record the clips required as data inputs to VGB. VGB uses skeleton data, so you need to ensure that you record that stream into your clips. Once you have recorded some clips including your gestures, you can import them into a VGB solution. So open VGB and create a new solution; to the solution you can add projects, one for each gesture you want to detect. This shows the solution structure:

As you can see, each project is split into training and test data; this will look something like this inside VGB itself:

The training clips should be added to the node with a .a, denoting that it is for analysis. To get started, or just to experiment, right-click on your empty solution node and choose ‘Create New Project with Wizard’, which will walk you through the available options.

Discrete vs Continuous Gestures

The main choice you will need to make is whether you want to create a discrete or a continuous gesture. A discrete gesture is boolean in nature, in that it is either happening or not, with an associated confidence value, whereas a continuous gesture provides a progress value and allows you to track progress, optionally through multiple discrete gestures. If you are experimenting, just pick a discrete gesture, as it is the simplest, but it is worth knowing that you can compose multiple discrete gestures and track progress through them; for example, you might do this for a golf swing gesture. For my test project I started by detecting a discrete military salute gesture, and later I added a continuous detector as well.
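To make the distinction concrete, here is a hypothetical Python model of the two result shapes, plus a hand-rolled way of composing discrete gestures into a progress value for something like a golf swing. The class names, stage names and `min_confidence` default are assumptions for illustration; they are not the Kinect SDK's types, and a continuous gesture trained in VGB learns its progress value from tagged data rather than counting stages like this.

```python
from dataclasses import dataclass


@dataclass
class DiscreteResult:
    detected: bool      # is the gesture happening in this frame?
    confidence: float   # 0.0 .. 1.0


@dataclass
class ContinuousResult:
    progress: float     # 0.0 (start) .. 1.0 (complete)


class StagedProgress:
    """Track progress through an ordered sequence of discrete gestures."""

    def __init__(self, stages: list[str], min_confidence: float = 0.5):
        if not stages:
            raise ValueError("need at least one stage")
        self.stages = stages
        self.min_confidence = min_confidence
        self.completed = 0

    def update(self, results: dict[str, DiscreteResult]) -> ContinuousResult:
        # Only the next expected stage can advance progress, so stages must occur in order
        if self.completed < len(self.stages):
            result = results.get(self.stages[self.completed])
            if result is not None and result.detected and result.confidence >= self.min_confidence:
                self.completed += 1
        return ContinuousResult(self.completed / len(self.stages))
```

With stages `["Backswing", "Downswing", "FollowThrough"]`, detecting `FollowThrough` before `Downswing` leaves progress unchanged, which is the ordering behaviour you would want for a swing.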

Once you have added a project with the wizard, you can add clips to it by right-clicking on the project. With a clip added, you need to tag it to mark the points at which the gesture is active. You can move the timeline over the clip and mark sections using the keyboard shortcuts displayed in the app.
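Conceptually, tagging turns each clip into per-frame training labels. The sketch below assumes the tags are inclusive `(start, end)` frame ranges, which is a simplification of whatever VGB stores internally; `frame_labels` is a hypothetical helper. Every frame outside a tagged range becomes a negative example.

```python
def frame_labels(n_frames: int, tagged: list[tuple[int, int]]) -> list[bool]:
    """Expand inclusive (start, end) tagged frame ranges into one label per frame.

    Frames outside every tagged range are labelled False (negative examples).
    """
    labels = [False] * n_frames
    for start, end in tagged:
        if not 0 <= start <= end < n_frames:
            raise ValueError(f"invalid tagged range {start}-{end}")
        for i in range(start, end + 1):
            labels[i] = True
    return labels
```

For example, `frame_labels(10, [(2, 4), (7, 7)])` marks frames 2, 3, 4 and 7 as positive and the remaining six frames as negative.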

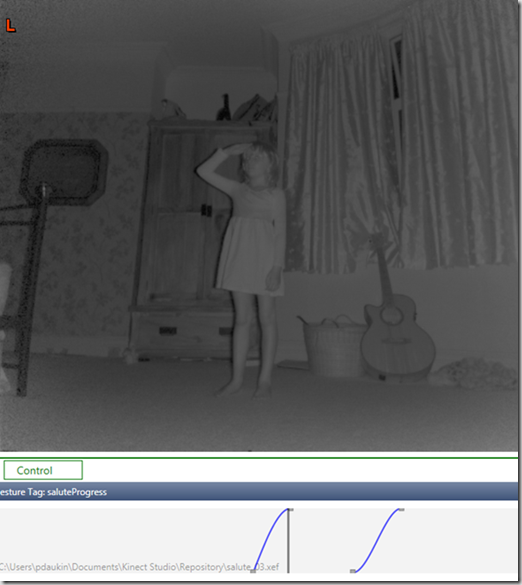

In the case of a ‘continuous’ gesture, progress through the gesture is defined by a spline, on which you can set points to define its shape:
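The tagged spline effectively maps each frame to a target progress value. As a simplification, the sketch below joins `(frame, progress)` control points with straight lines instead of a smooth spline; `progress_at` is a hypothetical helper and the control points in the example are invented.

```python
from bisect import bisect_right


def progress_at(frame: float, points: list[tuple[float, float]]) -> float:
    """Piecewise-linear progress from (frame, progress) points sorted by frame.

    Before the first point and after the last point the end values are held.
    """
    frames = [f for f, _ in points]
    if frame <= frames[0]:
        return points[0][1]
    if frame >= frames[-1]:
        return points[-1][1]
    # Find the segment with frames[i - 1] <= frame < frames[i]
    i = bisect_right(frames, frame)
    (f0, p0), (f1, p1) = points[i - 1], points[i]
    return p0 + (p1 - p0) * (frame - f0) / (f1 - f0)
```

With points `[(0, 0.0), (10, 0.5), (20, 1.0)]`, frame 5 maps to 0.25 and frame 15 to 0.75; a real spline would round off the corner at frame 10.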

At this stage the tagging is a bit fiddly to carry out, but this should improve as the product moves out of preview status. Whilst we are just experimenting here, for a real app using this technique the more data you have, and the better that data is tagged, the better the result will be. If there are certain behaviours that you don’t want detected as your gesture, you can ‘train’ those out by leaving them untagged, so the algorithm learns negative examples as well as positive ones. When you have tagged your clips, you can build the gesture database by building either the solution or a single project. During the build, VGB writes some interesting information to its output window, including details of the features considered by the algorithm and a list of the top ten features. The features are very enlightening and give an insight into your particular gesture; these are the top few considered for my ‘military salute’:

Top 10 contributing weak classifiers:

AngleVelocity( HandLeft, Head, HandRight ) rejecting inferred joints, fValue >= 0.500000, alpha = 0.815500

Angles( WristLeft, Head, WristRight ) using inferred joints, fValue >= 42.000000, alpha = 0.301555

MuscleTorqueX( HipLeft ) using inferred joints, fValue >= 0.699997, alpha = 0.235265…

These could also help you to understand the criteria for a gesture if you wanted to code up a heuristic solution.
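The ‘weak classifier’, `fValue` threshold and `alpha` terms in that output are the vocabulary of boosting (as in AdaBoost), where many simple threshold tests are combined in a weighted vote. The sketch below shows that idea using two of the classifiers listed above. It is not VGB's detector: it assumes `Angles(A, B, C)` means the angle at joint B between A and C, treats each classifier as voting +alpha when `fValue >= threshold` and -alpha otherwise, ignores the inferred-joint settings, and takes the `AngleVelocity` value as an input because its exact definition isn't given here. `angle_at`, `WeakClassifier` and `boosted_score` are hypothetical names.

```python
import math
from dataclasses import dataclass

Joint = tuple[float, float, float]  # (x, y, z) camera-space position


def angle_at(a: Joint, vertex: Joint, c: Joint) -> float:
    """Angle in degrees at `vertex` between the rays towards `a` and `c`.

    Assumed meaning of VGB's Angles(A, B, C) feature, with B as the vertex.
    """
    u = [ai - vi for ai, vi in zip(a, vertex)]
    v = [ci - vi for ci, vi in zip(c, vertex)]
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("joints must not coincide with the vertex")
    cos_angle = sum(x * y for x, y in zip(u, v)) / norm
    # Clamp to guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


@dataclass
class WeakClassifier:
    feature: str      # name of the feature this classifier tests
    threshold: float  # assumed to fire when fValue >= threshold
    alpha: float      # weight of this classifier's vote


def boosted_score(classifiers: list[WeakClassifier], features: dict[str, float]) -> float:
    """Weighted vote: +alpha for each classifier that fires, -alpha otherwise."""
    return sum(
        wc.alpha if features[wc.feature] >= wc.threshold else -wc.alpha
        for wc in classifiers
    )


# Thresholds and alphas copied from the build output above (2 of the top 10 only)
SALUTE_SUBSET = [
    WeakClassifier("AngleVelocity(HandLeft,Head,HandRight)", 0.5, 0.815500),
    WeakClassifier("Angles(WristLeft,Head,WristRight)", 42.0, 0.301555),
]
```

A positive score means the weighted vote leans towards ‘salute’; with only two of the ten classifiers, the result is an illustration of the mechanism, not a usable detector.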

If you have added any tagged test clips, these will be analysed as part of the build, and statistics about false positives and false negatives will be logged to the output window.
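As a rough picture of what those statistics count, here is a per-frame comparison of predicted detections against the tagged labels. Whether VGB counts per frame or per gesture occurrence is not stated here, so treat `confusion_counts` (a hypothetical helper) as an illustration of the terms rather than a reimplementation of VGB's report.

```python
def confusion_counts(predicted: list[bool], actual: list[bool]) -> dict[str, int]:
    """Count true/false positives and negatives, comparing frame by frame."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for p, a in zip(predicted, actual):
        if p and a:
            counts["tp"] += 1   # detected and tagged
        elif p:
            counts["fp"] += 1   # detected but not tagged: false positive
        elif a:
            counts["fn"] += 1   # tagged but missed: false negative
        else:
            counts["tn"] += 1   # correctly ignored
    return counts
```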

The result of the build is a database (with a .gbd file extension), which can be imported into an app using the Kinect SDK and used to detect your gesture. I’ll post the code for doing this in a Windows Store app in a subsequent post.

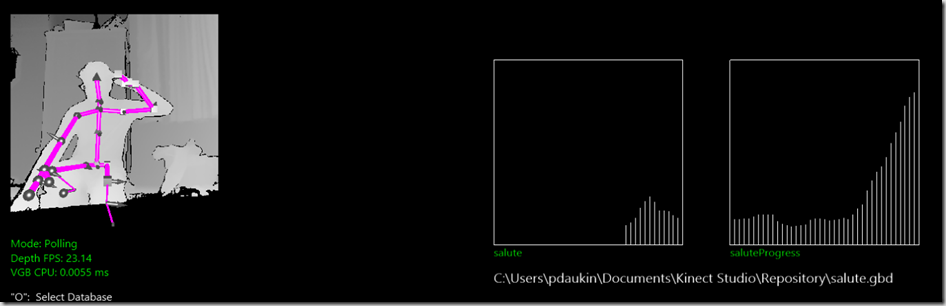

To test your gesture without writing any code, you can use the ‘Live Preview’ feature in VGB, found in the File menu as Live Preview…. You will need your Kinect plugged in for this, as it provides live feedback on detection based on your gesture database. It looks like this:

The graphs display progress for the continuous gesture and confidence for the discrete one. I’ll close this post out by saying that these tools, despite being in preview, are well thought out around testing: they provide repeatable input data and make it easy to re-test changes in the underlying algorithms against a set of gestures.
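When you move from Live Preview to an app, you will usually need to turn the per-frame confidence curve into discrete events. One common approach, sketched below as an assumption rather than anything VGB prescribes, is a trigger with hysteresis: fire once when confidence rises above an `on` threshold and only re-arm after it drops below a lower `off` threshold, so a confidence value that jitters around a single cut-off doesn't fire repeatedly. `ConfidenceTrigger` is a hypothetical class and its threshold values are placeholders you would tune by watching the preview graphs.

```python
class ConfidenceTrigger:
    """Fire once when confidence reaches `on`; re-arm when it falls below `off`."""

    def __init__(self, on: float = 0.8, off: float = 0.4):
        if not 0.0 <= off < on <= 1.0:
            raise ValueError("need 0 <= off < on <= 1")
        self.on, self.off = on, off
        self.armed = True

    def update(self, confidence: float) -> bool:
        """Feed one frame's confidence; returns True only on the frame the gesture fires."""
        if self.armed and confidence >= self.on:
            self.armed = False
            return True
        if not self.armed and confidence < self.off:
            self.armed = True
        return False
```

Feeding the confidences `0.1, 0.85, 0.9, 0.6, 0.3, 0.95` fires on the second and sixth frames only: the gesture has to fade out before it can be recognised again.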

Further information

Whitepaper - Visual Gesture Builder: A Data-Driven Solution to Gesture Detection

Video Tutorial - Custom Gestures End to End with Kinect and Visual Gesture Builder Part 1

Video Tutorial - Custom Gestures End to End with Kinect and Visual Gesture Builder (part 2)
