Kinecting XAML + Unity

Following on from my previous post, where I showed how easy it is to interact with Kinect data inside the Unity 3D environment, I wondered how I might manipulate 3D game objects using Kinect gestures within a Windows Store app. (Before I begin, I should point out that the Kinect integration is only available in the Pro version of Unity.) If you want to follow along, you will also need the Kinect Unity packages and the Unity Visual Studio Tools. These packages can be added from the Unity Project wizard, or added later using the Assets > Import Package menu.

I wanted to start by leveraging the built-in gestures that the Kinect SDK uses in its Controls Basics samples. The Kinect v2 SDK has a sample for each of WPF, XAML and DirectX. In WPF and XAML the gesture support is integrated into the UI framework, whereas the DirectX sample is lower level and shows how to use gesture recognizers directly.

I decided to use the Unity-to-XAML communication outlined in the Unity sample at http://docs.unity3d.com/Manual/windowsstore-examples.html, together with the higher-level gesture support in XAML. In case you were wondering, when you create a Windows Store app from Unity it is generated as either a C# XAML + DirectX app or a C++ XAML + DirectX app. In other words, it is a standard XAML app containing a SwapChainPanel that hosts the Unity content. The SwapChainPanel is a control that can sit anywhere in the visual tree and render DirectX graphics.
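Unity runs on its own app thread, separate from the XAML UI thread, which is why the Unity sample linked above (and the controller code later in this post) moves work between the two with AppCallbacks.InvokeOnAppThread and the XAML Dispatcher. As a rough mental model only, here is a framework-free sketch of that hand-off: one thread posts delegates and the owning thread runs them once per frame. The WorkQueue type and its Post/Drain methods are hypothetical names; this is not how Unity actually implements InvokeOnAppThread.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical model of an "invoke on another thread" queue, loosely analogous
// to AppCallbacks.InvokeOnAppThread. NOT Unity's implementation.
public sealed class WorkQueue
{
    private readonly ConcurrentQueue<Action> _pending = new ConcurrentQueue<Action>();

    // Safe to call from any thread, e.g. the XAML UI thread handling a gesture.
    public void Post(Action work)
    {
        if (work == null) throw new ArgumentNullException(nameof(work));
        _pending.Enqueue(work);
    }

    // Called only by the owning thread (think: once per Unity frame).
    // Runs queued items until the queue is empty and returns how many ran.
    public int Drain()
    {
        int ran = 0;
        while (_pending.TryDequeue(out Action work))
        {
            work();
            ran++;
        }
        return ran;
    }
}
```

The point of the design is that every scene change ends up running on the one thread that owns the Unity objects, regardless of which thread raised the gesture.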

Setting the Scene

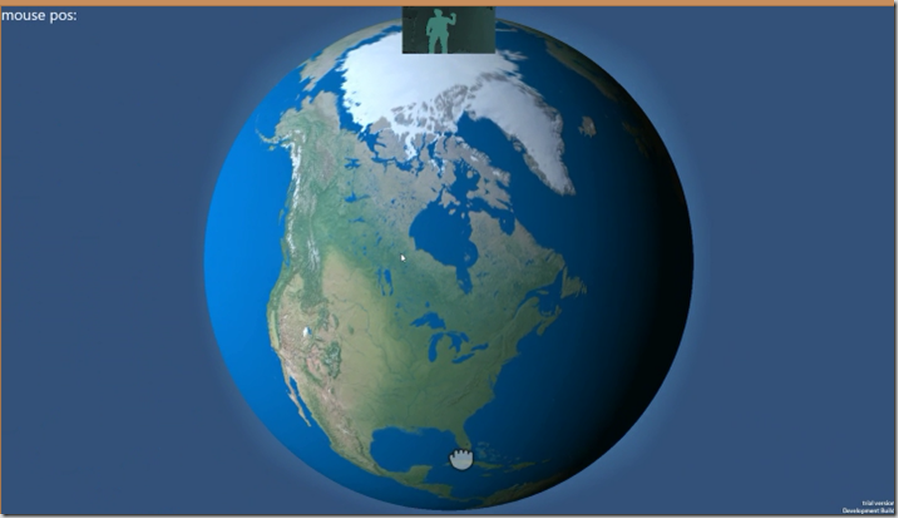

I started by looking through the Unity Assets store for a suitable 3D model and while browsing through the catalogue I found this free 3D earth model:

So, I imported this and added it to my scene in Unity. Now, I’m a beginner with Unity, so at this point I wanted to get a better feel for how to interact with GameObjects in a scene, how this all translates down to Windows Store and Windows Phone apps, and how to debug using Visual Studio.

Mouse/Camera

I thought a basic scenario would be adding the ability to orbit the camera around the 3D model; later I could think about how to achieve the same thing with Kinect gestures. To that end, I added the standard Unity MouseOrbit script to the MainCamera in my scene and selected my earth model as the ‘look-at’ target. So far I’ve just clicked a few buttons and I have a camera orbiting a 3D earth; let’s see if it runs as a Windows Store app.
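For reference, the core of a MouseOrbit-style script is a rotation built from two accumulated angles plus an offset back from the target. The sketch below reproduces that math outside Unity using System.Numerics, on the assumption that Quaternion.CreateFromYawPitchRoll(yaw, pitch, 0) stands in for Unity's Quaternion.Euler(pitch, yaw, 0) (with roll at zero both apply the pitch first, then the yaw). OrbitMath and Pose are hypothetical names. ClampAngle follows the wrap-then-clamp helper commonly shipped with MouseOrbit scripts; the MainPage.ClampAngle used later in this post is not shown, so treat that correspondence as an assumption too.

```csharp
using System;
using System.Numerics;

// Hypothetical sketch of MouseOrbit-style math using System.Numerics instead of UnityEngine.
// Angles are in degrees, as with Unity's Quaternion.Euler.
public static class OrbitMath
{
    // Wrap once into roughly [-360, 360], then clamp to [min, max].
    public static float ClampAngle(float angle, float min, float max)
    {
        if (angle < -360f) angle += 360f;
        if (angle > 360f) angle -= 360f;
        return Math.Max(min, Math.Min(max, angle));
    }

    private static float ToRadians(float degrees) => degrees * (float)Math.PI / 180f;

    // Camera rotation and position for a yaw (around Y), a pitch (around X) and a
    // distance from the target; intended to match Unity's
    // rotation = Quaternion.Euler(pitch, yaw, 0); position = rotation * (0, 0, -distance) + target.
    public static (Quaternion Rotation, Vector3 Position) Pose(float yawDeg, float pitchDeg, float distance, Vector3 target)
    {
        Quaternion rotation = Quaternion.CreateFromYawPitchRoll(ToRadians(yawDeg), ToRadians(pitchDeg), 0f);
        Vector3 offset = Vector3.Transform(new Vector3(0f, 0f, -distance), rotation);
        return (rotation, offset + target);
    }
}
```

With zero angles the camera sits distance units behind the target along -Z; a positive pitch lifts it above the target, and yaw swings it around the vertical axis.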

Windows Store

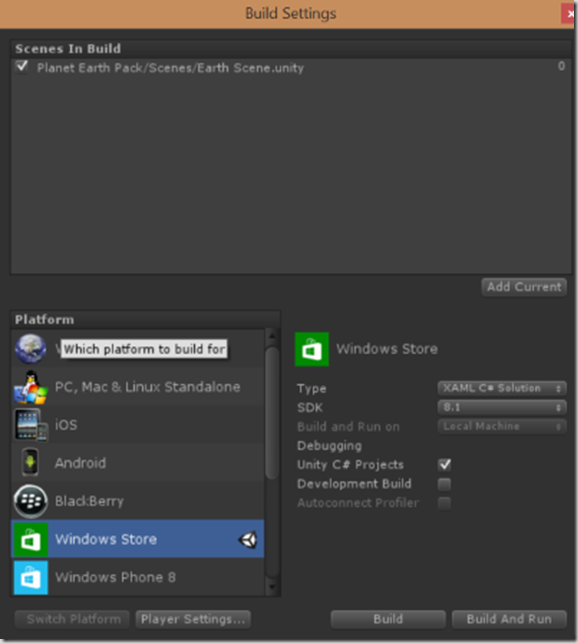

To run/debug a windows store app from this Unity scene you can select File > Build Settings and you will see the dialog below:

I adjusted a few of the settings, added the scene here and chose ‘Build’. I chose a folder to store the project files and Unity generated a VS solution containing my Store app code. I opened this in VS. One thing you will need to do before running the app is choose a processor architecture, as ‘Any CPU’ is not sufficient; I usually choose x86 while developing because the VS XAML designer won’t work with x64. With that selected you can hit F5 and the app will run. At this stage I had an earth that I could orbit around with the camera. I also tweaked the mouse orbit script a little to provide zoom in/out, as I would want this functionality later when using Kinect.
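The zoom tweak itself isn't shown, so the following is only a hypothetical sketch of one way to do it: keep the orbit distance in a small helper that accepts either an additive scroll-wheel step or a multiplicative scale factor, and clamp the result. The OrbitZoom name, the default step of 5 and the min/max bounds are all assumptions.

```csharp
using System;

// Hypothetical zoom helper (not the author's tweak): keeps the orbit distance
// within [Min, Max] for both additive and multiplicative zoom input.
public sealed class OrbitZoom
{
    public float Distance { get; private set; }
    public float Min { get; }
    public float Max { get; }

    public OrbitZoom(float distance, float min, float max)
    {
        if (min <= 0f || min > max) throw new ArgumentException("Require 0 < min <= max.");
        Min = min;
        Max = max;
        Distance = Clamp(distance);
    }

    // Additive step, e.g. scroll-wheel input: positive amounts move the camera closer.
    public void Scroll(float amount, float step = 5f) => Distance = Clamp(Distance - amount * step);

    // Multiplicative step, e.g. a manipulation scale delta: factors above 1 move
    // the camera further away, factors below 1 bring it closer.
    public void Scale(float factor) => Distance = Clamp(Distance * factor);

    private float Clamp(float d) => Math.Max(Min, Math.Min(Max, d));
}
```

Clamping matters most for the multiplicative path: the Kinect controller below applies distance *= args.Delta.Scale with no bounds, so repeated pinches can push the camera arbitrarily far out or right into the model.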

From Mouse to Kinect

For the XAML side of things, I needed a way to recognise gestures and pass that information through to the Unity scene. To detect the built-in gestures in XAML, you add a KinectRegion to the visual tree; it detects IKinectControl-derived controls within it, and with a little more plumbing you can write a class that receives manipulation events corresponding to gestures. Here are the steps and the basic code I used to get to this stage:

Create an IKinectControl-derived UserControl that can sit inside a KinectRegion covering the whole screen.

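```csharp
using Microsoft.Kinect.Toolkit.Input;
using Microsoft.Kinect.Xaml.Controls;
using Windows.UI.Xaml.Controls;

// A full-screen control that KinectRegion hands gesture input to.
public sealed partial class UnityGestureProxyControl : UserControl, IKinectControl
{
    public UnityGestureProxyControl()
    {
        this.InitializeComponent();
    }

    // Called by KinectRegion: add scale (zoom) and hold gestures to the recognizer,
    // then return the controller that will receive the manipulation events.
    public IKinectController CreateController(IInputModel inputModel, KinectRegion kinectRegion)
    {
        inputModel.GestureRecognizer.GestureSettings |=
            WindowsPreview.Kinect.Input.KinectGestureSettings.ManipulationScale |
            WindowsPreview.Kinect.Input.KinectGestureSettings.KinectHold;

        return new OrbitController((ManipulatableModel)inputModel, this, kinectRegion);
    }

    // This control responds to manipulation (pan/zoom) but not to presses.
    public bool IsManipulatable
    {
        get { return true; }
    }

    public bool IsPressable
    {
        get { return false; }
    }
}
```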

The IKinectControl interface has two properties, IsManipulatable and IsPressable, which report whether the control is manipulatable, pressable or both, plus a CreateController method that returns an object implementing IKinectController. I created a custom class implementing the required interfaces and used it to hook the input model up to the manipulation events raised in response to gesture recognition.

```csharp
using System;
using Microsoft.Kinect.Toolkit.Input;
using Microsoft.Kinect.Xaml.Controls;
using UnityEngine;
using Windows.UI.Xaml;

// Receives Kinect manipulation events on the XAML UI thread and moves the Unity camera.
public class OrbitController : IKinectManipulatableController, IDisposable
{
    private readonly ManipulatableModel _model;
    private readonly UnityGestureProxyControl _unityGestureProxyControl;
    private readonly KinectRegion _region;

    private GameObject _earth;     // assigned and used on Unity's app thread
    private bool _manipulating;

    // Orbit state; only read and written on the UI thread.
    private float distance = 50.0f;
    private float x = 0.0f;
    private float y = 0.0f;
    public float xSpeed = 1.0f;
    public float ySpeed = 1.0f;

    public OrbitController(ManipulatableModel model, UnityGestureProxyControl unityGestureProxyControl, KinectRegion region)
    {
        _region = region;
        _model = model;
        _unityGestureProxyControl = unityGestureProxyControl;

        // Unity may not have finished starting when KinectRegion creates this controller.
        if (UnityPlayer.AppCallbacks.Instance.IsInitialized())
        {
            Initialise();
        }
        else
        {
            UnityPlayer.AppCallbacks.Instance.Initialized += () => { Initialise(); };
        }
    }

    public void Initialise()
    {
        // Find the earth on Unity's app thread, then subscribe to gestures back on the UI thread.
        UnityPlayer.AppCallbacks.Instance.InvokeOnAppThread(new UnityPlayer.AppCallbackItem(() =>
        {
            _earth = GameObject.Find("Earth16128Tris");
            _unityGestureProxyControl.Dispatcher.RunAsync(Windows.UI.Core.CoreDispatcherPriority.Normal, () =>
            {
                _model.ManipulationStarted += _model_ManipulationStarted;
                _model.ManipulationUpdated += _model_ManipulationUpdated;
                _model.ManipulationCompleted += _model_ManipulationCompleted;
            });
        }), false);
    }

    void _model_ManipulationStarted(IManipulatableModel sender, WindowsPreview.Kinect.Input.KinectManipulationStartedEventArgs args)
    {
        _manipulating = true;
    }

    void _model_ManipulationUpdated(IManipulatableModel sender, WindowsPreview.Kinect.Input.KinectManipulationUpdatedEventArgs args)
    {
        if (!_manipulating)
        {
            return;
        }

        // UI thread: accumulate zoom and orbit angles from this gesture delta.
        distance *= args.Delta.Scale;
        var windowPoint = InputPointerManager.TransformInputPointerCoordinatesToWindowCoordinates(args.Delta.Translation, _region.Bounds);
        x += (float)windowPoint.X * xSpeed * 0.2f;
        y += (float)windowPoint.Y * ySpeed * 0.2f;
        y = MainPage.ClampAngle(y, -20.0f, 80.0f);

        // Copy the state so the callback below is unaffected by later UI-thread updates.
        float yaw = x, pitch = y, dist = distance;

        // Unity objects (transforms, cameras) are only touched on Unity's app thread.
        UnityPlayer.AppCallbacks.Instance.InvokeOnAppThread(new UnityPlayer.AppCallbackItem(() =>
        {
            if (_earth == null)
            {
                return;
            }

            Quaternion rotation = Quaternion.Euler(pitch, yaw, 0);
            Vector3 position = rotation * new Vector3(0.0f, 0.0f, -dist) + _earth.transform.position;

            // Camera.main (the camera tagged MainCamera) rather than Camera.current,
            // which Unity documents as the camera currently rendering, for low-level render callbacks.
            Camera.main.transform.rotation = rotation;
            Camera.main.transform.position = position;
        }), false);
    }

    void _model_ManipulationCompleted(IManipulatableModel sender, WindowsPreview.Kinect.Input.KinectManipulationCompletedEventArgs args)
    {
        _manipulating = false;
    }

    public ManipulatableModel ManipulatableInputModel
    {
        get { return _model; }
    }

    public FrameworkElement Element
    {
        get { return _unityGestureProxyControl; }
    }

    public void Dispose()
    {
        _model.ManipulationStarted -= _model_ManipulationStarted;
        _model.ManipulationUpdated -= _model_ManipulationUpdated;
        _model.ManipulationCompleted -= _model_ManipulationCompleted;
    }
}
```

This class hooks up the manipulation events and marshals them over to the Unity environment. I wasn’t happy with the resulting camera orbiting behaviour, as I suspect I made some wrong assumptions when porting the math from the original MouseOrbit script, so I may revisit this in future. Finally, this XAML needs to be placed within MainPage.xaml:

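```xml
<!-- Assumes the page declares xmlns:k="using:Microsoft.Kinect.Xaml.Controls"
     and an xmlns:local mapping to the app's own namespace. -->
<k:KinectUserViewer Width="200" Height="100" VerticalAlignment="Top"></k:KinectUserViewer>
<k:KinectRegion>
    <Grid>
        <local:UnityGestureProxyControl Background="Aqua"></local:UnityGestureProxyControl>
    </Grid>
</k:KinectRegion>
```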

Conclusions

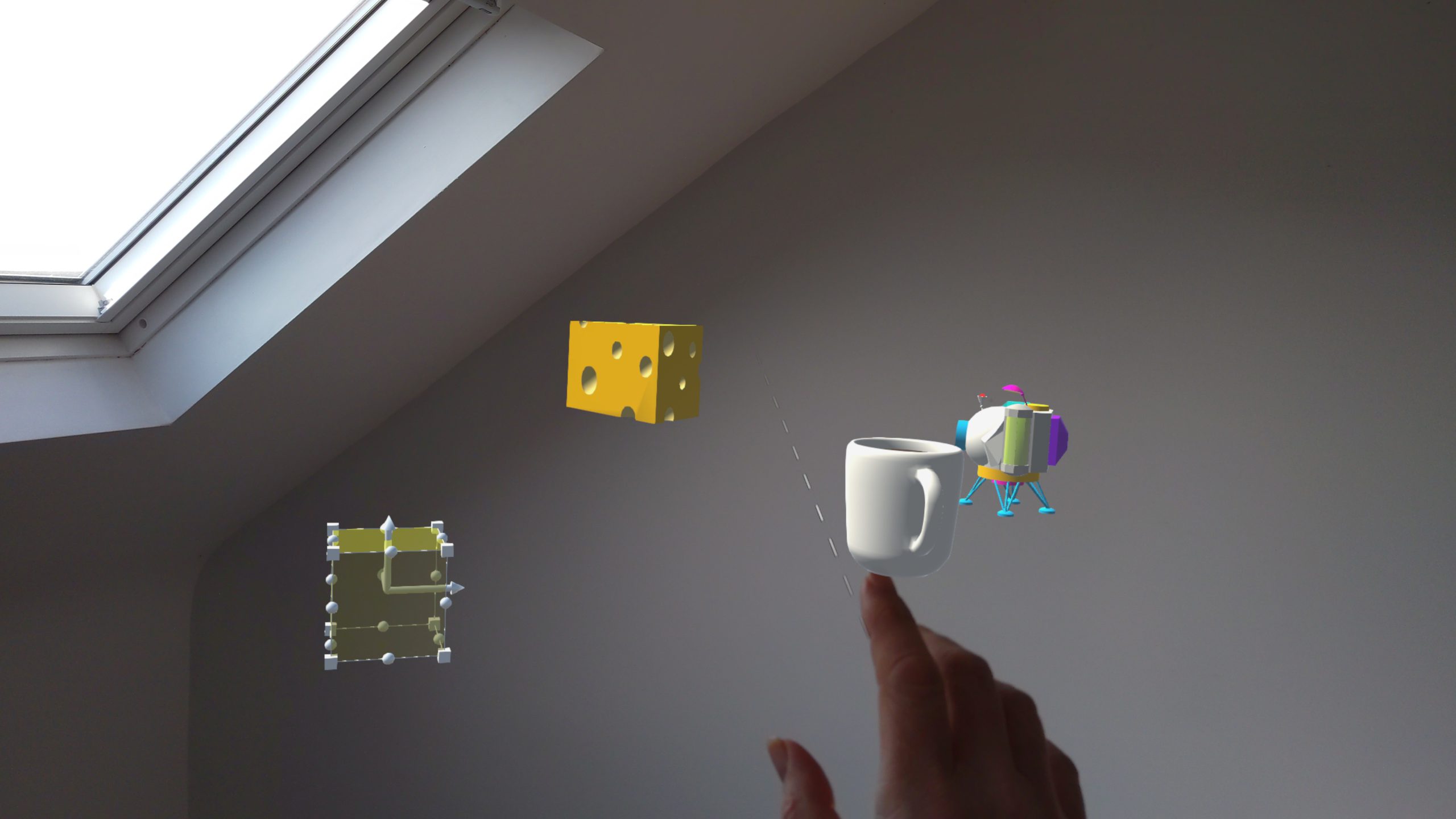

Here’s a video showing the resulting gestures in action:

An alternative would be to implement this directly in the Unity environment using the KinectGestureRecognizer, which I suspect would be better from a performance perspective. The sample code for this project can be downloaded here (please note that some paths appear to be hard-coded in the generated Unity script projects, so the solution won’t build without a bit of tweaking).
