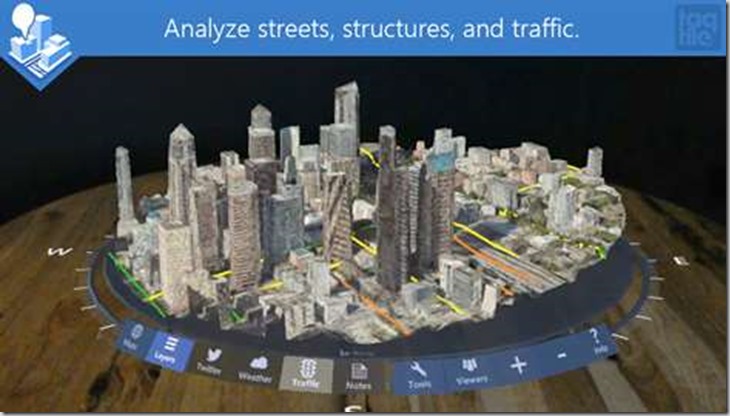

HoloLens & 3D Mapping

This is not specific to HoloLens, but I am approaching the problem from that perspective because it is of most interest to me right now. All of the code can be found in a GitHub repo here. The code and concepts apply across the board and could be useful in any situation that involves sourcing data about real buildings and representing them in 3D, more specifically in Unity. I imagine this could help in several scenarios:

Creating a proof of concept that overlays real-time data onto, or incorporates it into, 3D map data (this is the scenario that prompted me to investigate)

Providing a starting point for a scene set in a real location

Facilitating a more dynamic fly-through that could update in real time as you navigate around streets or the globe

This won't provide an expert's view on mapping data, as I am not an expert and this seems like a complex area; my previous experience here has been placing some pins on a map for a 2D mobile app! What I can do, though, is share what I have learned and hopefully give you a starting point if, like past me, you want to get up and running. I am also not trying to replace or undermine any of the commercial offerings here; instead, I used this exploration to aid my own understanding and would reach for those in any commercial setting. By the way, if you make one of these or can recommend a good one for use in HoloLens apps, please leave a note in the comments and I will be happy to review it in another post.

I have tried HoloMaps (which is excellent), but I couldn't find a public API for the 3D Bing data it uses that I could try out.

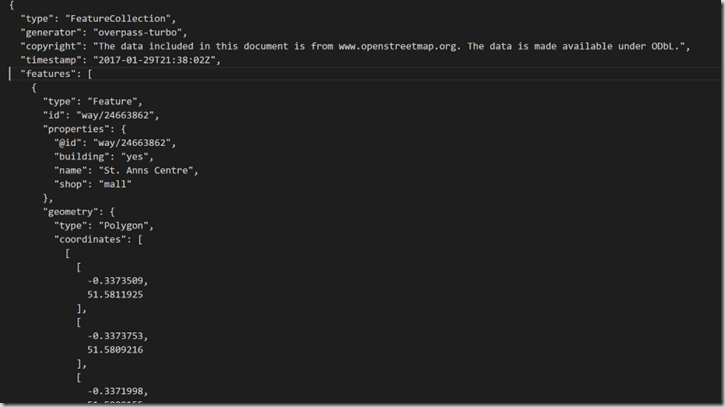

From my initial research I quickly found that there is an internet standard for sharing geometry data as JSON (GeoJSON), which seemed fairly easy to understand and use. I decided to run with that and first build a component in Unity to generate a 3D mesh from GeoJSON; the thinking was that I would later be able to find an API or service to retrieve GeoJSON data and plug that in. Since we'll be using an open standard, the hope is that the data source can be swapped out for an alternative depending on app requirements.
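For readers new to the format, here is a minimal Python sketch (the project itself is C# in Unity) of pulling building footprints out of a GeoJSON FeatureCollection. It relies only on the structure the GeoJSON standard defines: a `features` array whose entries carry a `geometry` and `properties`, with positions written as `[longitude, latitude]`. The sample data and the `name` property are made up for illustration.

```python
import json

# Hand-written sample; the "name" property is illustrative only.
SAMPLE = """
{
  "type": "FeatureCollection",
  "features": [
    {
      "type": "Feature",
      "properties": {"name": "Example building"},
      "geometry": {
        "type": "Polygon",
        "coordinates": [[[-0.1276, 51.5072], [-0.1270, 51.5072],
                         [-0.1270, 51.5076], [-0.1276, 51.5076],
                         [-0.1276, 51.5072]]]
      }
    }
  ]
}
"""


def building_footprints(geojson_text):
    """Yield (name, outer_ring) for every Polygon feature.

    GeoJSON positions are [longitude, latitude], so ring points come back as
    (lon, lat). Only the outer ring (index 0) is kept; later rings are holes.
    """
    data = json.loads(geojson_text)
    for feature in data.get("features", []):
        geometry = feature.get("geometry") or {}
        if geometry.get("type") != "Polygon":
            continue  # Point, LineString, MultiPolygon etc. are skipped here
        outer = [(lon, lat) for lon, lat, *_ in geometry["coordinates"][0]]
        name = (feature.get("properties") or {}).get("name", "unnamed")
        yield name, outer


for name, ring in building_footprints(SAMPLE):
    print(name, len(ring))
```

Note that a GeoJSON ring repeats its first position at the end, so a square footprint has five positions.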

Rendering GeoJSON in Unity

I started out by getting a GeoJSON sample:

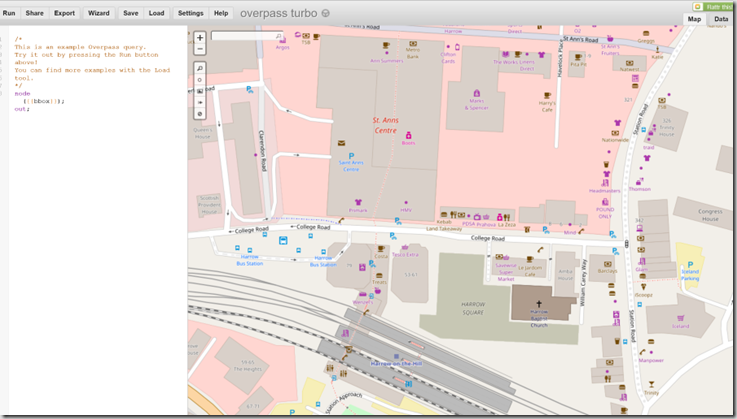

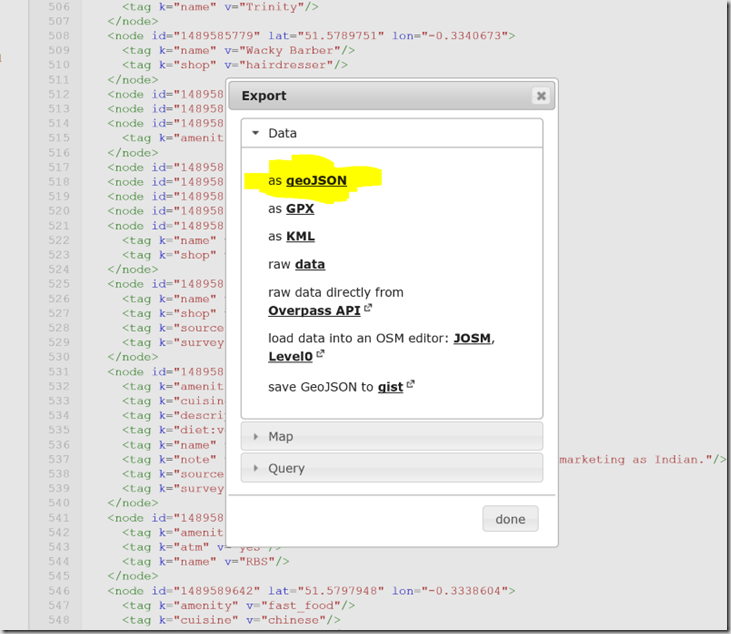

It is possible to use the OpenStreetMap site here to get data back in .osm format by specifying a bounding box formed from latitude and longitude coordinates.
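As an illustration of the bounding-box request, the sketch below builds a URL for the OSM API v0.6 `map` call, which returns `.osm` XML for a bounding box. This is an assumption about how you might script the download, not a description of what the site's export page does internally, and the API rejects requests that cover too large an area. The main point is the parameter order: `left,bottom,right,top` (minimum longitude, minimum latitude, maximum longitude, maximum latitude). The `BoundingBox` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    south: float  # minimum latitude
    west: float   # minimum longitude
    north: float  # maximum latitude
    east: float   # maximum longitude

    def __post_init__(self):
        if not (self.south < self.north and self.west < self.east):
            raise ValueError("expected south < north and west < east")


def osm_map_url(box: BoundingBox) -> str:
    """URL for the OSM API v0.6 'map' call, which returns .osm XML.

    bbox is ordered left,bottom,right,top: min lon, min lat, max lon, max lat.
    """
    return (
        "https://api.openstreetmap.org/api/0.6/map?bbox="
        f"{box.west},{box.south},{box.east},{box.north}"
    )


print(osm_map_url(BoundingBox(south=51.507, west=-0.128, north=51.508, east=-0.1265)))
```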

I wanted GeoJSON, though, and after some further digging I found that you can access OpenStreetMap data through the Overpass API, which has a tool to facilitate this and uses its own query language for requests.
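Here is a sketch, again in Python, of an Overpass QL query for the buildings in a bounding box. Overpass bounding boxes are ordered `(south, west, north, east)`, unlike the OSM API call. The `(._;>;);` step adds the nodes that the building ways reference, which any conversion to GeoJSON needs in order to look up coordinates. The public endpoint URL and the 25-second timeout are assumptions; check the usage policy of whichever Overpass instance you use.

```python
from urllib.parse import urlencode

OVERPASS_INTERPRETER = "https://overpass-api.de/api/interpreter"


def overpass_buildings_query(south, west, north, east, output="json"):
    """Overpass QL for all building ways in a bbox plus the nodes they use.

    output can be "json" or "xml" (the latter gives OSM XML).
    """
    bbox = f"{south},{west},{north},{east}"  # Overpass order: S, W, N, E
    return (
        f"[out:{output}][timeout:25];"
        f'way["building"]({bbox});'
        "(._;>;);"  # union of the ways and the nodes they reference
        "out body;"
    )


def overpass_request_url(query):
    # The query travels as the 'data' parameter of a GET request.
    return OVERPASS_INTERPRETER + "?" + urlencode({"data": query})


query = overpass_buildings_query(51.507, -0.128, 51.508, -0.1265)
print(query)
```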

I used the tool to get some test data, which I then used to work out how to render the 3D buildings.

Unity Custom Editor

My initial goal was to create a tool that I could use in the Unity editor to generate the building geometry, as opposed to a more dynamic control that lets the end user explore a map, although I may look into that next. Unity supports custom editors, and the approach I took was to create a MonoBehaviour script, attached to a game object, with an associated custom editor script. These two scripts work together: the first extends the game object and the second provides an editor user interface to control how the game object gets extended. The two scripts are the MonoBehaviour-derived script and the editor itself (derived from the Editor base class).

It's important to keep in mind that the Editor-derived script is designed to run only in the Unity editor, whereas the MonoBehaviour is designed to run in-game and is associated with a GameObject (we don't want any dependency on the UnityEditor namespace there). I think this approach makes sense, as the ultimate plan is to create something that runs in-game.

Given that the input at this stage is a JSON file, we need a way to get this data into memory. That's a job I usually reserve for JSON.NET, but there seem to be a few challenges getting it to work with Unity (see http://www.what-could-possibly-go-wrong.com/unity-and-nuget/). Instead, I searched around, found fullserializer and decided to give that a try. On the whole that decision worked out very well, as it seems like a robust and flexible JSON serializer. I did run into this issue, though, and needed to make some changes to the source code, but I was bought in enough to warrant the extra effort.

One inconvenience of using a custom editor is that Unity coroutines don't run in this environment, as they need an update loop to keep running. It is straightforward to use the EditorApplication.update event to provide that update loop, but the code does need to be written.
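The idea of pumping coroutines from an update event can be sketched outside Unity, with Python generators standing in for C# `IEnumerator`s. In the editor, a handler added to `EditorApplication.update` would call `MoveNext()` on each pending enumerator in much the same way. The `CoroutineRunner` class is hypothetical, and it ignores Unity yield instructions such as `WaitForSeconds`, treating every `yield` as "resume on the next tick".

```python
class CoroutineRunner:
    """Advances generator-based coroutines once per external update tick."""

    def __init__(self):
        self._running = []

    def start(self, coroutine):
        self._running.append(coroutine)

    def update(self):
        # Swap in a fresh list so coroutines started during this tick are kept.
        current, self._running = self._running, []
        for coroutine in current:
            try:
                next(coroutine)
            except StopIteration:
                continue  # finished: drop it
            self._running.append(coroutine)

    @property
    def busy(self):
        return bool(self._running)


def count_to(n, log):
    for i in range(1, n + 1):
        log.append(i)
        yield  # treated as "resume on the next tick"


runner = CoroutineRunner()
log = []
runner.start(count_to(3, log))
ticks = 0
while runner.busy:
    runner.update()  # in Unity, EditorApplication.update would drive this
    ticks += 1
```

The coroutine needs one extra tick after its last `yield` to finish, so three steps take four updates.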

To keep a clear separation between the MonoBehaviour and the custom editor, I used interfaces:

IProgress – allowed calls to update a progress dialog

IUpdateHandler – facilitated hooking a callback to run coroutines from the editor

IDialog – allowed calls to show a dialog box
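The shape of that separation can be sketched with Python protocols. Only the three interface names come from the project; the method names and signatures are hypothetical. In the real editor script, the implementations would presumably wrap calls such as `EditorUtility.DisplayProgressBar` and `EditorUtility.DisplayDialog`, while the in-game code only ever sees the interfaces.

```python
from typing import Callable, Protocol


# Interface names from the post; every method signature is hypothetical.
class IProgress(Protocol):
    def report(self, title: str, info: str, fraction: float) -> None: ...
    def clear(self) -> None: ...


class IUpdateHandler(Protocol):
    # Where an editor-driven coroutine runner would be hooked in.
    def add_update(self, callback: Callable[[], None]) -> None: ...


class IDialog(Protocol):
    def show(self, title: str, message: str) -> None: ...


class MapGenerator:
    """Game-side logic: depends only on the interfaces, never on the editor."""

    def __init__(self, progress: IProgress, dialog: IDialog):
        self._progress = progress
        self._dialog = dialog

    def generate(self, building_names):
        total = len(building_names)
        for i, name in enumerate(building_names, start=1):
            # (mesh generation for this building would happen here)
            self._progress.report("Generating map", name, i / total)
        self._progress.clear()
        self._dialog.show("Map generated", f"Created {total} buildings")


# Stand-ins for the editor-side implementations: they just record calls.
class RecordingProgress:
    def __init__(self):
        self.fractions = []

    def report(self, title, info, fraction):
        self.fractions.append(fraction)

    def clear(self):
        pass


class RecordingDialog:
    def __init__(self):
        self.messages = []

    def show(self, title, message):
        self.messages.append(message)


progress, dialog = RecordingProgress(), RecordingDialog()
MapGenerator(progress, dialog).generate(["A", "B", "C", "D"])
```

Because `MapGenerator` only needs objects with the right methods, the same code can be driven by editor dialogs, an in-game UI or, as here, test doubles.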

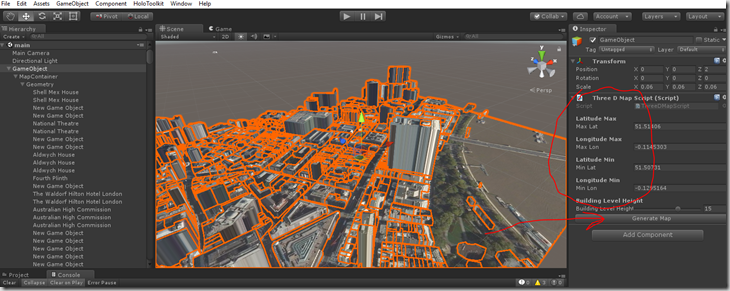

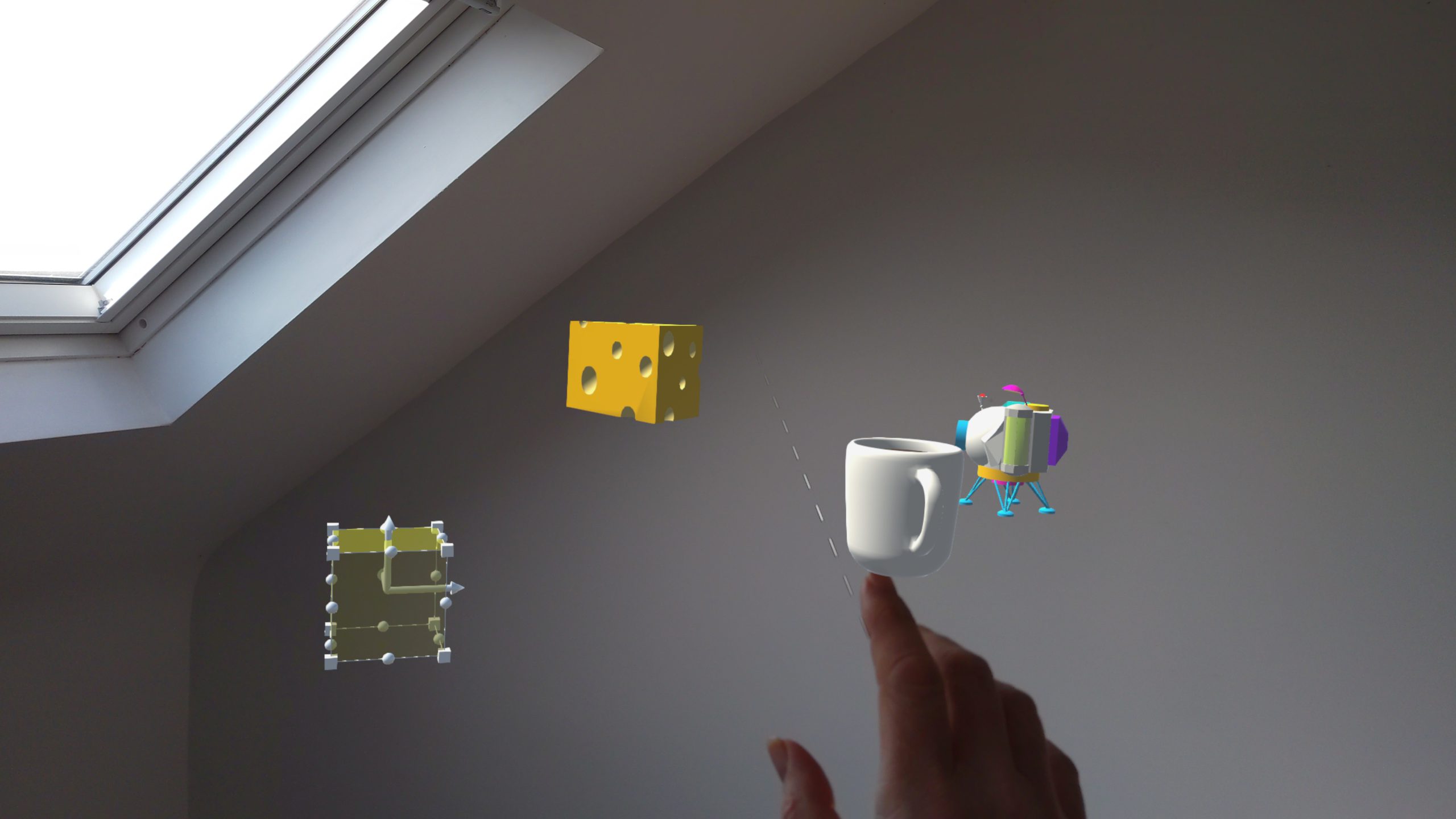

To use it, add an empty GameObject to your scene and then add the ThreeDMapScript to that GameObject as a new component. The custom editor for this component provides inputs for defining a bounding box in terms of latitude and longitude. You can also specify the height of the levels used for the buildings; this could instead be sourced from other data sets to give a more accurate representation of building heights.
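One way the level height could feed into a building's final height is sketched below. It assumes the building's OpenStreetMap tags end up as GeoJSON properties keyed by tag name (converters differ here; some nest them under a `tags` object) and uses the OSM `height` and `building:levels` tags when present. The fallback to a single level is an assumption, not necessarily what the project does.

```python
def building_height(properties, level_height=3.0, default_levels=1):
    """Estimate a building's height in metres from its GeoJSON properties.

    An explicit 'height' tag wins; otherwise 'building:levels' multiplied by
    level_height; otherwise default_levels * level_height.
    """
    height = properties.get("height")
    if height is not None:
        try:
            # Tolerate values such as "12 m" by stripping the unit.
            return float(str(height).replace("m", "").strip())
        except ValueError:
            pass  # unparseable value: fall through to the level count
    levels = properties.get("building:levels")
    try:
        levels = float(levels) if levels is not None else default_levels
    except ValueError:
        levels = default_levels
    return levels * level_height


print(building_height({"building:levels": "4"}, level_height=3.5))
```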

Once these are set, the Generate Map button causes the script to call the REST API to retrieve the GeoJSON and the satellite image, generate the meshes and apply the required material. Each building is currently represented by a separate mesh, as can be seen in the scene hierarchy window, and is named using data from the GeoJSON.

There is a whole load more metadata about the buildings in the data, which could be surfaced in an app.

Mesh Creation

Once we have the GeoJSON in memory, we need to take the geometry data and convert it to a polygonal mesh. The data has a list of 'features', among which are the buildings, each with its own geometry. A quick scan of the data reveals different geometry types, each specified as a collection of coordinates given in lat/long. I concentrated my efforts on the 'Polygon' geometry type and used a polygon triangulator from here to convert the data to a mesh. This produced 2D polygons, which could then be extruded to the height of the associated building to give the final form of each building.

The algorithm used here doesn't support polygons with holes; this could be a future improvement.
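For reference, the sketch below shows ear clipping, one common way to triangulate a simple polygon; it is not necessarily the algorithm used by the linked triangulator. It repeatedly finds a convex corner whose triangle contains no other vertex, cuts it off, and continues until three vertices remain. It does not handle holes either, and it is O(n³) in the worst case, which is acceptable for footprints with a handful of vertices. GeoJSON rings repeat their first point, so drop the last position before calling it.

```python
def signed_area(points):
    """Shoelace formula: positive when the ring is counter-clockwise."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def _cross(o, a, b):
    """z component of (a - o) x (b - o); > 0 means o->a->b turns left."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def _in_triangle(p, a, b, c):
    """True if p is inside or on the counter-clockwise triangle abc."""
    return _cross(a, b, p) >= 0 and _cross(b, c, p) >= 0 and _cross(c, a, p) >= 0


def triangulate(points):
    """Ear clipping for a simple polygon without holes.

    points: list of (x, y); the first point is not repeated at the end.
    Returns counter-clockwise triangles as index triples into points.
    """
    if len(points) < 3:
        return []
    indices = list(range(len(points)))
    if signed_area(points) < 0:
        indices.reverse()  # work with a counter-clockwise ring
    triangles = []
    while len(indices) > 3:
        m = len(indices)
        for k in range(m):
            ia, ib, ic = indices[k - 1], indices[k], indices[(k + 1) % m]
            a, b, c = points[ia], points[ib], points[ic]
            if _cross(a, b, c) <= 0:
                continue  # reflex or collinear corner: not an ear
            others = (points[j] for j in indices if j not in (ia, ib, ic))
            if any(_in_triangle(p, a, b, c) for p in others):
                continue  # another vertex would be cut off with this ear
            triangles.append((ia, ib, ic))
            del indices[k]
            break
        else:
            raise ValueError("no ear found; is the polygon self-intersecting?")
    triangles.append(tuple(indices))
    return triangles


print(triangulate([(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]))
```

A polygon with n vertices always yields n - 2 triangles, so the L-shaped example produces four.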

Here’s some pseudo-code for the creation of the 3D buildings:

    FOREACH building
        FOREACH geometry
            Convert lat/long to metres
            Convert from X/Y plane to X/Z plane
            Move centre of polygon to the origin
            Triangulate & extrude
            Calculate UV coordinates
            Translate back out to original location
            Apply material
        END
    END
    Create a plane representing the ground tile
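The per-geometry steps of the pseudo-code (plane swap, centring, extrusion and translating back) might look like this in Python, given a footprint already converted to metres and roof triangles from any triangulator. The sketch centres on the bounding-box centre rather than a true centroid, gives each wall quad its own four vertices so walls can later get their own normals and UVs, and omits the floor since it is never seen. Winding is flipped to clockwise because Unity treats clockwise triangles as front-facing. The function name and return shape are hypothetical.

```python
def build_building_mesh(footprint_xy, height, roof_triangles):
    """Extrude a counter-clockwise footprint (metres, map X/Y) into a mesh.

    Returns (vertices, triangles, position): vertices are (x, y, z) with +y up
    and the footprint centred on the origin; position is where the object's
    transform should go to put the building back in place.
    """
    # Move the centre of the footprint to the origin (bounding-box centre).
    xs = [x for x, _ in footprint_xy]
    ys = [y for _, y in footprint_xy]
    cx = (min(xs) + max(xs)) / 2.0
    cy = (min(ys) + max(ys)) / 2.0
    # Map plane X/Y -> Unity ground plane X/Z: map north (+y) becomes +z.
    local = [(x - cx, y - cy) for x, y in footprint_xy]

    # Roof ring occupies vertex indices 0..n-1.
    vertices = [(x, height, z) for x, z in local]
    # Roof triangles arrive counter-clockwise; swap two indices for clockwise.
    triangles = [(a, c, b) for a, b, c in roof_triangles]

    n = len(local)
    for i in range(n):
        (x0, z0), (x1, z1) = local[i], local[(i + 1) % n]
        base = len(vertices)
        # Bottom-left, bottom-right, top-right, top-left as seen from outside.
        vertices += [(x0, 0.0, z0), (x1, 0.0, z1), (x1, height, z1), (x0, height, z0)]
        triangles += [(base, base + 2, base + 1), (base, base + 3, base + 2)]

    # Transform position that translates the mesh back into place.
    return vertices, triangles, (cx, 0.0, cy)


footprint = [(100.0, 200.0), (110.0, 200.0), (110.0, 210.0), (100.0, 210.0)]
verts, tris, pos = build_building_mesh(footprint, 6, [(0, 1, 2), (0, 2, 3)])
print(len(verts), len(tris), pos)
```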

Notice that some work is done here to create the mesh centred on the origin and then use its transform to translate it back into position. Also notice the steps that generate UV coordinates and apply a material; these enable a satellite image to be textured onto the buildings (more on that later). I found some code at http://stackoverflow.com/questions/12896139/geographic-coordinates-converter which I used to convert from lat/long coordinates to metres.
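The linked Stack Overflow code isn't reproduced here. As an alternative illustration, the sketch below uses a local equirectangular approximation, which is usually accurate enough over a bounding box a few hundred metres across. One thing worth knowing: a plain Web Mercator conversion, as used by many map tile APIs, stretches distances by roughly 1/cos(latitude), so buildings come out too large away from the equator unless that is corrected for.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius in metres


def latlon_to_local_metres(lat, lon, origin_lat, origin_lon):
    """Approximate (east, north) offset in metres of (lat, lon) from an origin.

    Equirectangular approximation: longitude differences are scaled by the
    cosine of the mean latitude. Good over a few hundred metres; the error
    grows with distance from the origin.
    """
    mean_lat = math.radians((lat + origin_lat) / 2.0)
    east = EARTH_RADIUS_M * math.radians(lon - origin_lon) * math.cos(mean_lat)
    north = EARTH_RADIUS_M * math.radians(lat - origin_lat)
    return east, north


# One degree of longitude on the equator is about 111.3 km.
print(latlon_to_local_metres(0.0, 1.0, 0.0, 0.0))
```

Using the south-west corner of the bounding box as the origin keeps every footprint in positive, small coordinates.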

GeoJSON API

I had assumed earlier that GeoJSON data would be easy to get via an API, but at this point I'm not so sure, as I couldn't find a free API that provided it. As a result, I decided to roll my own API as well. The API I created calls the Overpass API to retrieve data in OSM form, then uses an open source project, OSMToGeoJSON.NET, to convert the OSM data to GeoJSON and return it. I created the API using ASP.NET Core and just ran it locally while developing the rest of the project.
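The API relies on OSMToGeoJSON.NET for the conversion. To show what that conversion involves in its simplest form, here is a much-reduced Python sketch that assumes Overpass output in JSON (`[out:json]`) rather than XML: nodes supply coordinates, and each closed way becomes a GeoJSON `Polygon` with a single outer ring. Real converters also handle multipolygon relations, holes and many edge cases this ignores.

```python
import json


def osm_json_to_geojson(osm):
    """Convert closed ways in Overpass JSON output to a GeoJSON FeatureCollection.

    Only the simplest case is handled: each closed way becomes a Polygon with
    one outer ring. Relations and unclosed ways are skipped.
    """
    elements = osm.get("elements", [])
    nodes = {e["id"]: (e["lon"], e["lat"]) for e in elements if e["type"] == "node"}
    features = []
    for e in elements:
        if e["type"] != "way":
            continue
        refs = e.get("nodes", [])
        if len(refs) < 4 or refs[0] != refs[-1]:
            continue  # a closed ring needs >= 4 positions, first == last
        if any(ref not in nodes for ref in refs):
            continue  # a referenced node is missing from the response
        ring = [list(nodes[ref]) for ref in refs]  # GeoJSON order: [lon, lat]
        features.append({
            "type": "Feature",
            "id": f"way/{e['id']}",
            "properties": dict(e.get("tags", {})),
            "geometry": {"type": "Polygon", "coordinates": [ring]},
        })
    return {"type": "FeatureCollection", "features": features}


sample = {"elements": [
    {"type": "node", "id": 1, "lat": 51.5072, "lon": -0.1276},
    {"type": "node", "id": 2, "lat": 51.5072, "lon": -0.1270},
    {"type": "node", "id": 3, "lat": 51.5076, "lon": -0.1270},
    {"type": "way", "id": 10, "nodes": [1, 2, 3, 1], "tags": {"building": "yes"}},
]}
print(json.dumps(osm_json_to_geojson(sample)))
```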

Texture

I decided to explore using satellite imagery as a texture for the buildings and the ground tile plane. I used the Bing Maps static map API to retrieve an aerial image using the same lat/long bounding box as the request for the geometry data. I proxied these calls via my API too, and it might make sense to combine them into one call per bounding box, as they naturally need to be called together. The UV texture coordinate calculations turned out to be a little more complicated: to work out which sub-rectangle of the returned image corresponded to the bounding box, it was necessary to make another API call to get the associated metadata and then use that in some simple calculations to work out the offsets correctly. Also, I gave no consideration to texturing the vertical walls of the buildings, and as a result they don't look too good. I wondered whether something like tri-planar texture mapping would help, but ultimately the image data for the sides of the buildings is missing from the image.
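A sketch of the UV calculation follows, assuming the metadata call yields the geographic bounding box of the whole returned image and that the imagery uses Web Mercator, as Bing Maps does. Under those assumptions, u is linear in longitude but v is linear in Mercator y rather than in latitude, which matters more the taller the bounding box and the further it is from the equator. The function names are hypothetical.

```python
import math


def mercator_y(lat_deg):
    """Web Mercator northing (unitless), increasing northwards."""
    lat = math.radians(lat_deg)
    return math.log(math.tan(math.pi / 4 + lat / 2))


def lat_lon_to_uv(lat, lon, image_bbox):
    """UV coordinate of (lat, lon) within an aerial image.

    image_bbox = (south, west, north, east) of the whole returned image.
    v = 0 at the southern (bottom) edge, matching Unity's bottom-left UV origin.
    """
    south, west, north, east = image_bbox
    u = (lon - west) / (east - west)
    v = (mercator_y(lat) - mercator_y(south)) / (mercator_y(north) - mercator_y(south))
    return u, v


# The middle latitude of a tall box sits below v = 0.5 in Mercator.
print(lat_lon_to_uv(55.0, 5.0, (50.0, 0.0, 60.0, 10.0)))
```

Each roof vertex's UV can then be computed from its original lat/long, so the texture lines up regardless of where the mesh is later translated.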

Optimisation

All of the above is not specific to HoloLens, but I wanted this to run well on a HoloLens, so we need to go a bit further: the HoloLens is essentially a mobile device, with a mobile CPU/GPU, so we can't just assume that everything will run at 60 fps out of the box. To track the frame rate the device is running at, we can use either the HoloLens Device Portal or the FPSDisplay prefab from the HoloToolkit, which provides a UI element that stays in your field of view and shows the current FPS. I use one of these in the sample in this project's GitHub repo.
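For a rough idea of what an on-screen FPS readout computes, here is a hypothetical smoothed counter. It is not the HoloToolkit FPSDisplay source, and the smoothing factor is an assumption. At 60 fps each frame has a budget of about 16.7 ms.

```python
class FpsCounter:
    """Exponentially smoothed frames-per-second from per-frame durations."""

    def __init__(self, smoothing=0.1):
        self.smoothing = smoothing  # weight given to the newest frame time
        self._avg_dt = None

    def tick(self, delta_seconds):
        """Feed one frame's duration in seconds; returns the smoothed FPS."""
        if self._avg_dt is None:
            self._avg_dt = delta_seconds
        else:
            self._avg_dt += (delta_seconds - self._avg_dt) * self.smoothing
        return 1.0 / self._avg_dt if self._avg_dt > 0 else float("inf")


FRAME_BUDGET_MS = 1000.0 / 60  # ~16.7 ms per frame to sustain 60 fps

counter = FpsCounter()
print(counter.tick(1 / 60))
```

Smoothing the frame time rather than the FPS value keeps a single slow frame from making the readout jump wildly.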

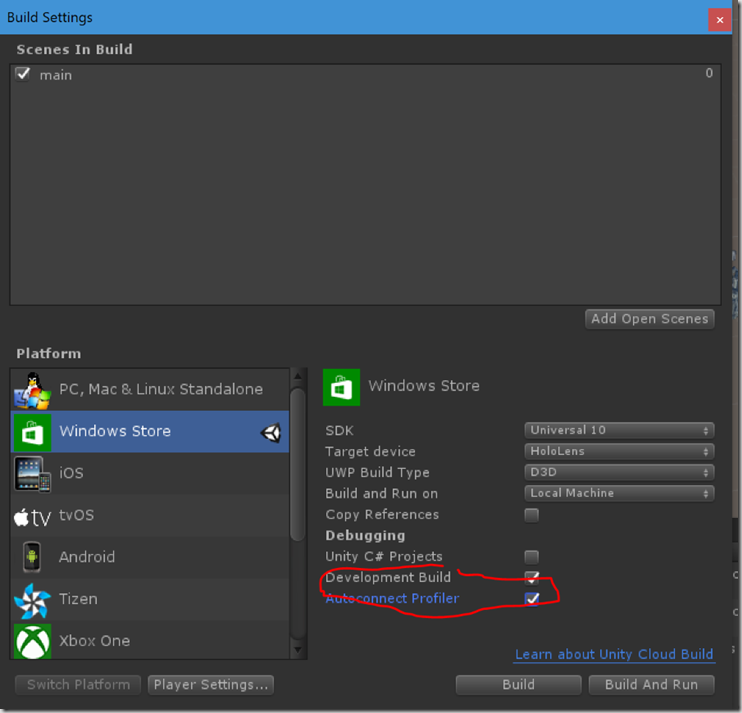

To begin profiling on the HoloLens, open Build Settings in the Unity editor and make sure that the Development Build and Autoconnect Profiler options are both checked.

Autoconnect Profiler ensures that frames from the start of the run are captured.

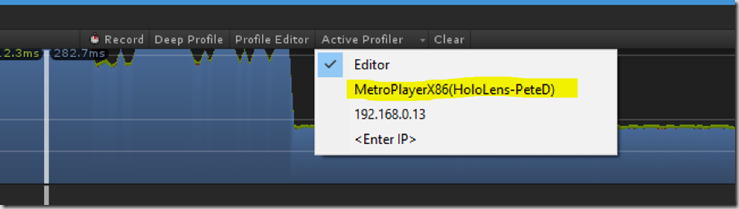

Also, ensure that the InternetClient and InternetClientServer capabilities are checked in the Player settings. If you now build and deploy your app to the HoloLens and open the Unity Profiler window, you should see an entry for your device in the active profiler dropdown at the top of the window.

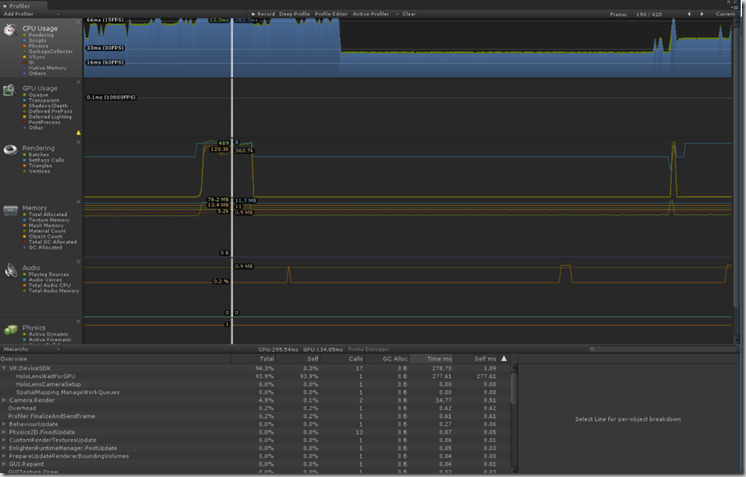

Now, if you record, you can collect detailed profiling information for each frame.

As you can see from the video below, the app is running at a low frame rate. I haven't given much thought to optimisation at this stage, but in a subsequent post I will take a closer look at the data and try to get the app closer to 60 fps.
