Face Tracking – Kinect 4 Windows v2

Since working on the virtual rail project I haven’t had much chance to do any Kinect programming. That has now changed: I ordered a Kinect 4 Windows v2, have been hacking away with it over the last few weeks, and wanted to share my experiences here. I don’t intend to cover anything introductory; for that, please see the Programming for Kinect for Windows v2 Jumpstart videos here http://channel9.msdn.com/Series/Programming-Kinect-for-Windows-v2 and also Mike Taulty’s blog series here http://mtaulty.com/CommunityServer/blogs/mike_taultys_blog/archive/tags/Kinect/default.aspx, in which Mike takes you right from opening the box to getting dancing skeletons and onwards in whichever direction it takes him.

I’m going to ease into this gently with a look at face tracking, starting out with managed C# code in a Windows Store application. I suspect this might change as I learn how best to translate the concepts over to native C++ code: I have been re-learning C++ for a while now and have also taken the opportunity to look into DirectX 11, so I expect a lot of my future samples to go in that direction.

Kinect Sensor

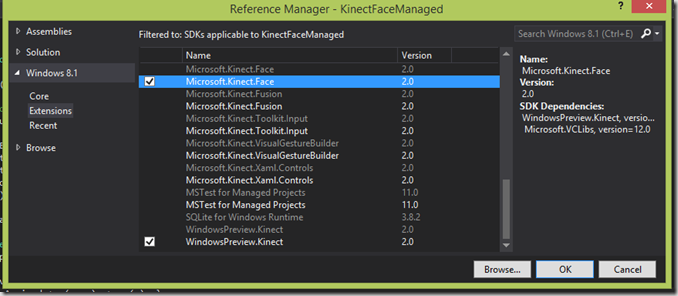

To get the sensor started you will need to install the Kinect for Windows SDK 2.0 Public Preview from here. You will need references to two WinRT components: WindowsPreview.Kinect and Microsoft.Kinect.Face. These are distributed as extension SDKs and can be added via the Add Reference dialog under Extensions. You will also need to select a processor architecture for the project, as “Any CPU” is not supported (x86 keeps the Visual Studio designer working correctly). Finally, remember to set the Windows Store app capabilities to include Microphone and Webcam.

For an event-based program the general pattern is to get a KinectSensor object, open a reader object for a data stream, subscribe to events for when the data arrives, and finally call Open on the sensor object. Here is a very simple example:


```csharp
using WindowsPreview.Kinect;

private KinectSensor _kinect = KinectSensor.Default;
private ColorFrameReader _colorReader;

private void StartKinect()
{
    // Open readers
    _colorReader = _kinect.ColorFrameSource.OpenReader();

    // Hook up frame arrived events
    _colorReader.FrameArrived += OnColorFrameArrivedHandler;

    // Finally, open the Kinect stream
    _kinect.Open();
}

private void OnColorFrameArrivedHandler(object sender, ColorFrameArrivedEventArgs e)
{
    // Retrieve frame reference
    ColorFrameReference colorRef = e.FrameReference;

    // Acquire the color frame; disposing it hands it back to the sensor
    using (ColorFrame colorFrame = colorRef.AcquireFrame())
    {
        // The frame may have expired before we got to it
        if (colorFrame == null) return;

        // Process Color-frame
    }
}
```

Reactive Extensions

For me, Reactive Extensions (Rx) is a natural fit for Kinect programming in an event-driven application, as it removes the need to think about the mechanics of composing and orchestrating the associated event streams. Here is a similar example to the one above, rewritten with Rx; it shows how to subscribe to an observable and how to specify which threading context the subscription and the event delivery should occur on.

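```csharp
using System;
using System.Reactive.Concurrency;
using System.Reactive.Linq;
using Windows.Foundation;
using WindowsPreview.Kinect;

private KinectSensor _kinect = KinectSensor.Default;
private ColorFrameReader _colorReader;
private IDisposable _colorSubscription;

private void StartKinect()
{
    // Open readers
    _colorReader = _kinect.ColorFrameSource.OpenReader();

    // Wrap FrameArrived (a TypedEventHandler in the WinRT API) as an observable.
    // The conversion overload makes Rx add and remove the same delegate instance;
    // removing a freshly created lambda would silently leave the handler attached.
    var colorFrames = Observable
        .FromEvent<TypedEventHandler<ColorFrameReader, ColorFrameArrivedEventArgs>, ColorFrameArrivedEventArgs>(
            onNext => (s, e) => onNext(e),
            h => _colorReader.FrameArrived += h,
            h => _colorReader.FrameArrived -= h)
        .SubscribeOn(TaskPoolScheduler.Default)
        .ObserveOn(TaskPoolScheduler.Default);

    // Keep the subscription so it can be disposed when the page is torn down
    _colorSubscription = colorFrames.Subscribe(OnColorFrame);

    // Finally, open the Kinect stream
    _kinect.Open();
}

private void OnColorFrame(ColorFrameArrivedEventArgs e)
{
    // Retrieve frame reference
    ColorFrameReference colorRef = e.FrameReference;

    // Acquire the color frame
    using (ColorFrame colorFrame = colorRef.AcquireFrame())
    {
        // The frame may have expired before this thread got to it
        if (colorFrame == null) return;

        // Process Color-frame
    }
}
```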

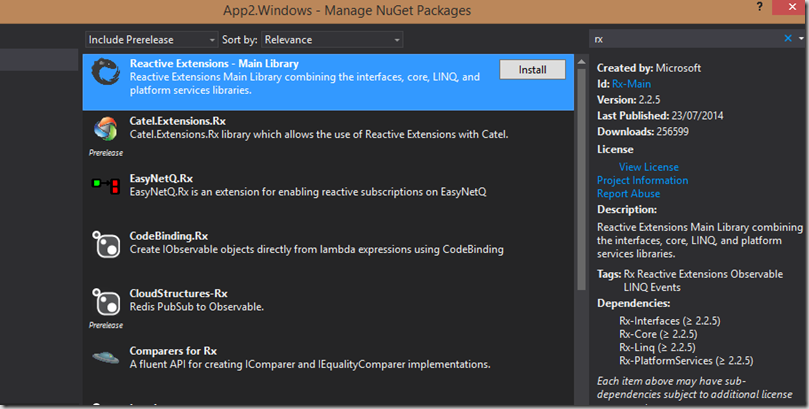

Rx is available as a nuget package – so install into your app and you should be good to go:

Face Detection

Face tracking needs the tracking ID of a body before it can start, and I also need colour frames so that we can see the face. As a result I will use a MultiSourceFrameReader, which I will ask to deliver body and colour frames together in the same event. The body data contains the skeletons in the camera’s view, but all we need from it is a tracking ID to hand to the face tracker. The colour frames will be copied into a WriteableBitmap for display.
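The reader setup isn’t spelled out in code here, so the following is a minimal sketch of what it might look like, assuming the Public Preview’s WindowsPreview.Kinect API: open a MultiSourceFrameReader for body and colour frames, pull a tracking ID out of the body data, and convert each colour frame straight into a WriteableBitmap’s pixel buffer. The fields `_multiReader`, `_bodies`, `_colorBitmap` and `_currentTrackingId` are hypothetical names, and the sketch assumes the handler runs on the UI thread (needed to touch the WriteableBitmap). It is not the code from the downloadable sample.

```csharp
using System.Linq;
using Windows.UI.Xaml.Media.Imaging;
using WindowsPreview.Kinect;

private MultiSourceFrameReader _multiReader;   // hypothetical field names
private Body[] _bodies;
private WriteableBitmap _colorBitmap;
private ulong _currentTrackingId;              // 0 = no tracked body yet

private void StartMultiSourceReader()
{
    // Size the bitmap from the colour stream's BGRA description
    FrameDescription desc = _kinect.ColorFrameSource.CreateFrameDescription(ColorImageFormat.Bgra);
    _colorBitmap = new WriteableBitmap(desc.Width, desc.Height);

    // One reader, one event, delivering body and colour frames together
    _multiReader = _kinect.OpenMultiSourceFrameReader(FrameSourceTypes.Body | FrameSourceTypes.Color);
    _multiReader.MultiSourceFrameArrived += OnMultiSourceFrameArrived;
    _kinect.Open();
}

// Assumption: raised on the UI thread, so the bitmap can be updated directly
private void OnMultiSourceFrameArrived(object sender, MultiSourceFrameArrivedEventArgs e)
{
    MultiSourceFrame multiFrame = e.FrameReference.AcquireFrame();
    if (multiFrame == null) return;

    using (BodyFrame bodyFrame = multiFrame.BodyFrameReference.AcquireFrame())
    {
        if (bodyFrame != null)
        {
            if (_bodies == null) _bodies = new Body[bodyFrame.BodyCount];
            bodyFrame.GetAndRefreshBodyData(_bodies);

            // All face tracking needs from the body data is a tracking ID
            Body tracked = _bodies.FirstOrDefault(b => b != null && b.IsTracked);
            _currentTrackingId = tracked != null ? tracked.TrackingId : 0;
        }
    }

    using (ColorFrame colorFrame = multiFrame.ColorFrameReference.AcquireFrame())
    {
        if (colorFrame != null)
        {
            // Convert to BGRA directly into the bitmap's pixel buffer, then redraw
            colorFrame.CopyConvertedFrameDataToBuffer(_colorBitmap.PixelBuffer, ColorImageFormat.Bgra);
            _colorBitmap.Invalidate();
        }
    }
}
```

To display the colour stream, assign `_colorBitmap` to the `Source` of an `Image` element on the page.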

With the preparation work done, we now need to reference the Face library (Microsoft.Kinect.Face).

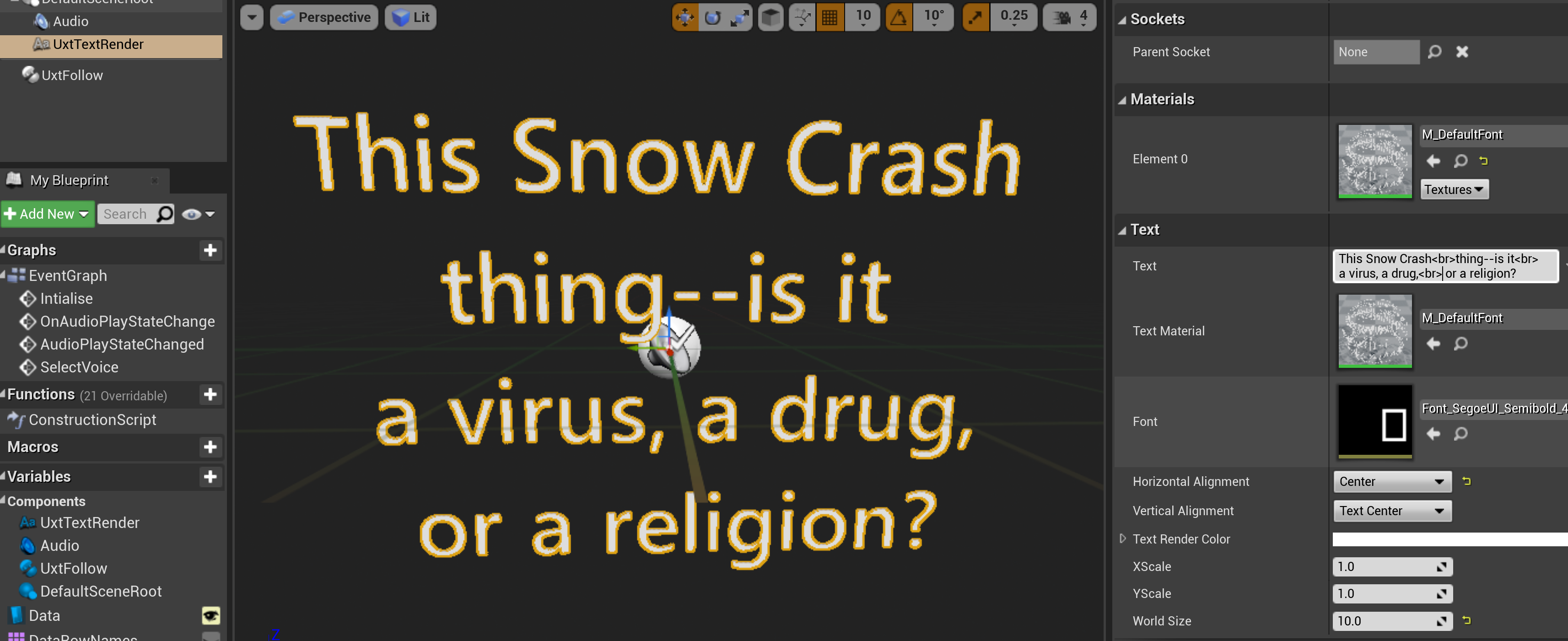

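```csharp
using System.Reactive.Concurrency;
using System.Reactive.Linq;
using Microsoft.Kinect.Face;
using Windows.Foundation;

_frameSource = new FaceFrameSource(_kinect, 0, FaceFrameFeatures.BoundingBoxInColorSpace | FaceFrameFeatures.FaceEngagement | FaceFrameFeatures.Happy);
_faceFrameReader = _frameSource.OpenReader();

// Pass the same delegate instance to add and remove so that unsubscribing works
var faceFrames = Observable
    .FromEvent<TypedEventHandler<FaceFrameReader, FaceFrameArrivedEventArgs>, FaceFrameArrivedEventArgs>(
        onNext => (s, e) => onNext(e),
        h => _faceFrameReader.FrameArrived += h,
        h => _faceFrameReader.FrameArrived -= h)
    .SubscribeOn(TaskPoolScheduler.Default)
    .ObserveOn(Dispatcher); // deliver on the UI thread so OnFaceFrames can update controls

_faceFrameSubscription = faceFrames.Subscribe(OnFaceFrames);
```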

Using a similar pattern to the multi-source frame reader, we create a FaceFrameSource that specifies the data we are interested in, open a FaceFrameReader on it, and subscribe to its FrameArrived events. I have asked for the face bounding box (in colour space), whether the face is ‘engaged’ with the Kinect, and whether the face is happy or not.
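The FaceFrameSource above is created with a tracking ID of 0, so it has no body to follow until we give it one from the body data. A minimal sketch of that hand-off, assuming it is called from the body-frame handler after `GetAndRefreshBodyData`; `UpdateFaceTrackingId` is a hypothetical helper name, and picking the first tracked body is just one simple policy.

```csharp
using System.Linq;
using Microsoft.Kinect.Face;
using WindowsPreview.Kinect;

// Hypothetical helper: call after bodyFrame.GetAndRefreshBodyData(bodies)
private void UpdateFaceTrackingId(Body[] bodies)
{
    // Pick the first body the sensor is currently tracking
    Body tracked = bodies.FirstOrDefault(b => b != null && b.IsTracked);
    if (tracked == null) return; // nobody in view: keep the current ID

    // Only touch the face source when the ID actually changes
    if (_frameSource.TrackingId != tracked.TrackingId)
    {
        _frameSource.TrackingId = tracked.TrackingId;
    }
}
```

Checking for a change before assigning avoids resetting the face source on every body frame while the same person stays in view.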

Now the general pattern for the event handlers is as follows:

```csharp
// using pattern to dispose frames as soon as possible
using (var frame = args.FrameReference.AcquireFrame())
{
    if (frame != null)
    {
        // Copy required data
    }
}
// Schedule further processing..
```

This way we hold on to each frame for as short a time as possible, and any heavier work happens after it has been released.

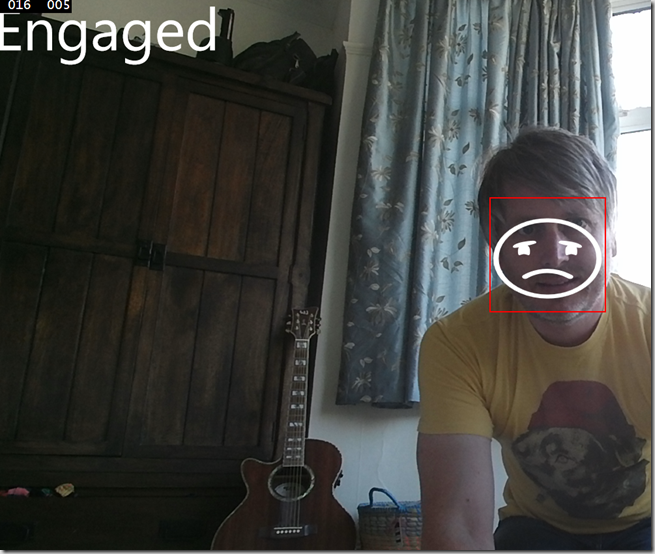

For the face frame handler, I will hook up the bounding box data to a display rectangle, and whether the face is happy and/or engaged with the camera will be represented by some simple UI controls.
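A minimal sketch of such a handler, following the dispose-early pattern above. It assumes the face observable delivers on the UI thread (as with `ObserveOn(Dispatcher)`), and that `_faceRectangle`, `_happyCheckBox` and `_engagedCheckBox` are hypothetical XAML elements, with the Rectangle sitting on a Canvas the same size as the 1920x1080 colour image so that colour-space pixels map directly onto it.

```csharp
using Microsoft.Kinect.Face;
using Windows.UI.Xaml.Controls;
using WindowsPreview.Kinect;

private void OnFaceFrames(FaceFrameArrivedEventArgs args)
{
    FaceFrameResult result = null;

    // Copy the result out and release the frame straight away
    using (FaceFrame frame = args.FrameReference.AcquireFrame())
    {
        if (frame != null)
        {
            result = frame.FaceFrameResult;
        }
    }

    // FaceFrameResult is null when no face is currently being tracked
    if (result == null) return;

    // Bounding box is in colour-image pixel coordinates
    RectI box = result.FaceBoundingBoxInColorSpace;
    Canvas.SetLeft(_faceRectangle, box.Left);
    Canvas.SetTop(_faceRectangle, box.Top);
    _faceRectangle.Width = box.Right - box.Left;
    _faceRectangle.Height = box.Bottom - box.Top;

    // DetectionResult can be Yes, No, Maybe or Unknown; only Yes counts as true here
    _happyCheckBox.IsChecked = result.FaceProperties[FaceProperty.Happy] == DetectionResult.Yes;
    _engagedCheckBox.IsChecked = result.FaceProperties[FaceProperty.Engaged] == DetectionResult.Yes;
}
```

Treating `Maybe` as false is a design choice; a UI that wants to show uncertainty could map each DetectionResult value to its own state instead.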

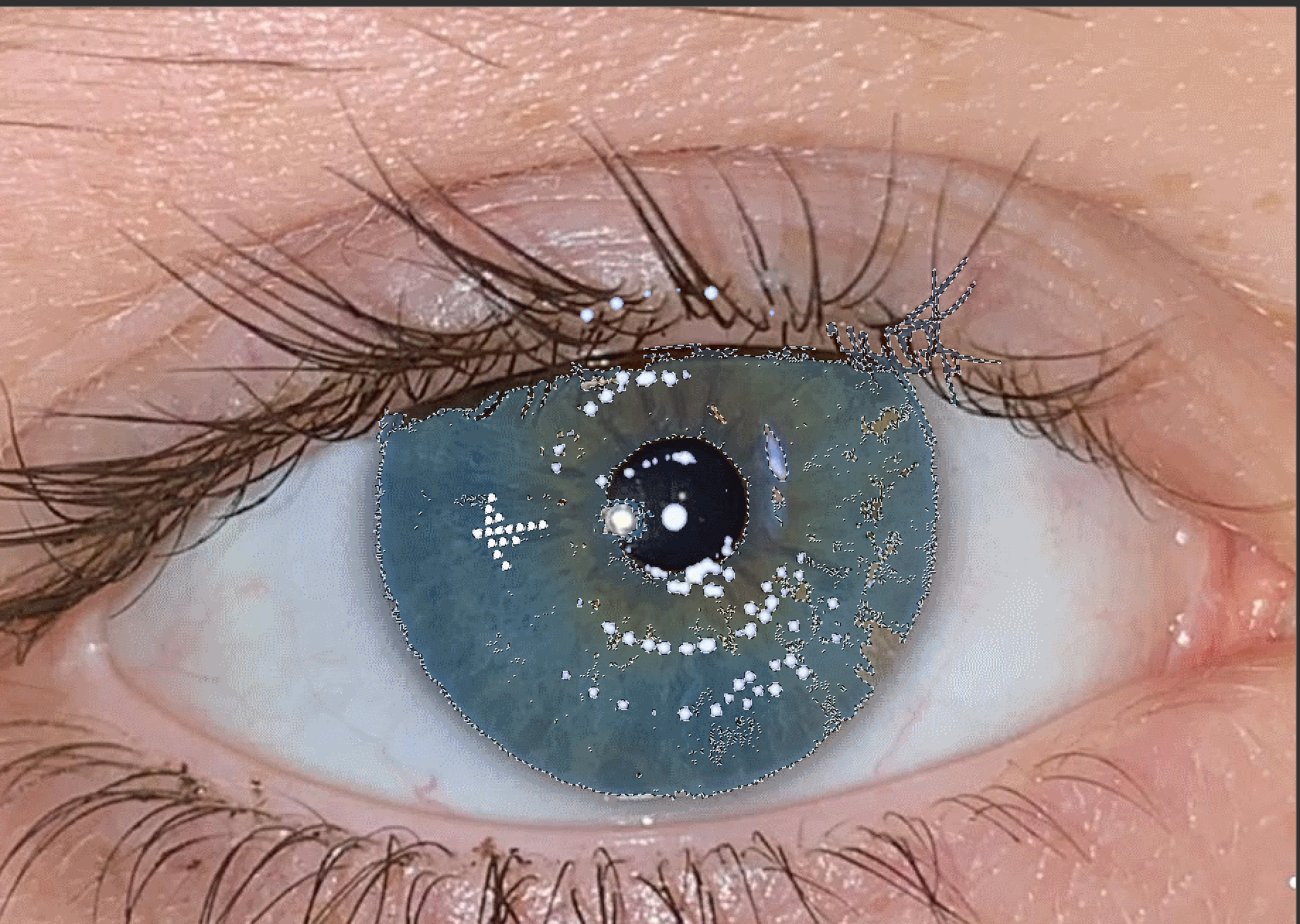

Here’s a screenshot of the resulting sample running:

The sample project can be downloaded here: http://1drv.ms/1uJ9bFV
